Arcee Cloud: The LLM Solution for Everyone

We’re thrilled to announce that we’re launching Arcee Cloud, a fully hosted SaaS offering that makes world-class LLM production accessible to all – in an easy-to-use platform for Training, Merging, and Deploying custom language models.

Arcee Cloud is the future of LLMs.

We know that’s a bold statement, but we say it with full confidence, because we’re keenly aware of the many pain points involved in training and productionizing language models, and we’ve built a platform that effectively eliminates the biggest challenges.

First, what exactly is Arcee Cloud? It’s a fully hosted SaaS offering that our team here at Arcee.AI is launching at the end of June. It consists of an end-to-end platform in which a user:

- Uploads their dataset

- Trains a model using a selected Open Source model as a base, and does one or more (or all) of these steps:

- Continual Pre-Training (CPT)

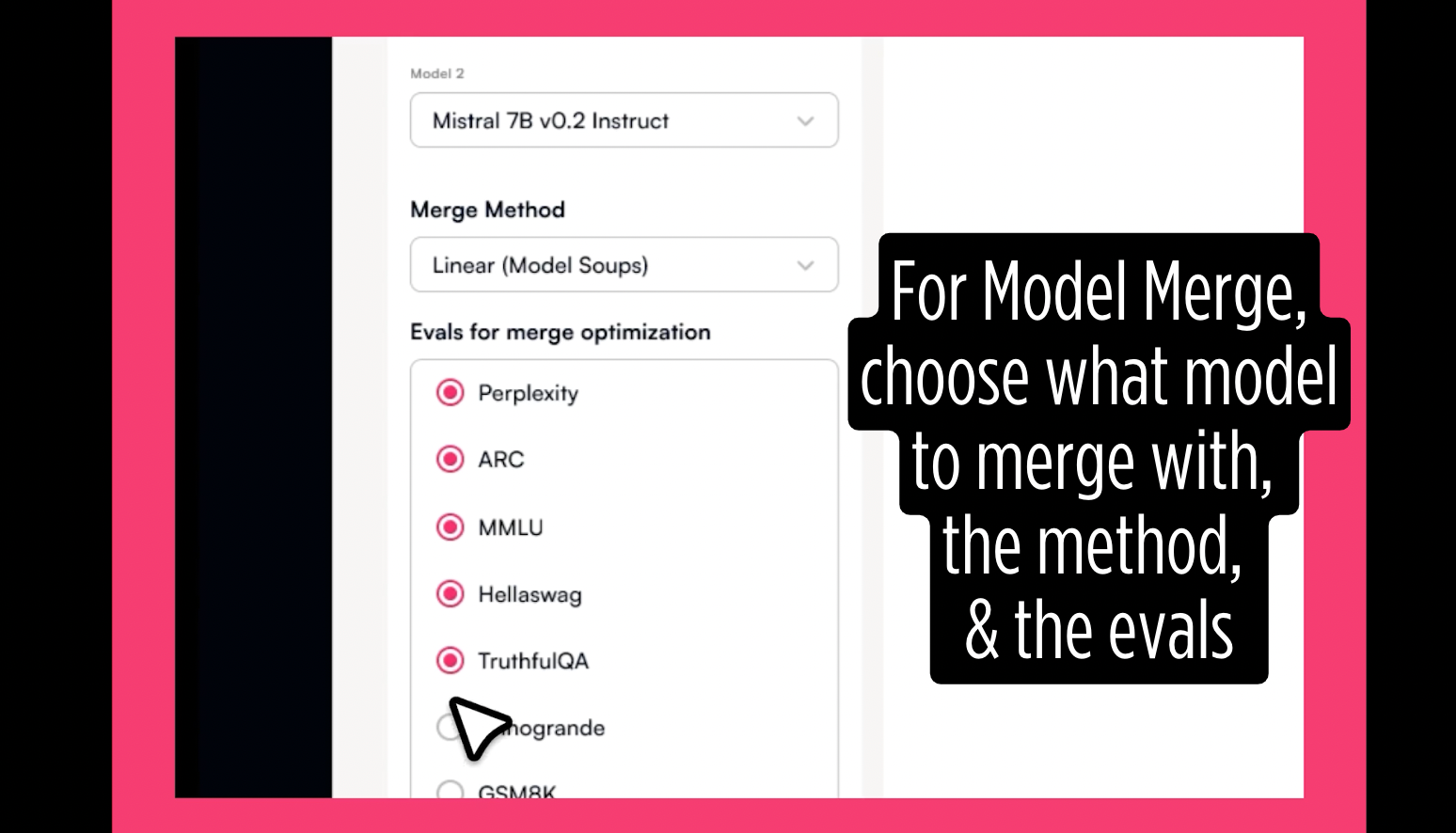

- Model Merging

- Supervised Fine-Tuning (SFT)

- Direct Preference Optimization (DPO)

- Deploys their model.

Available as both a UI and a full SDK, the platform is easy to use for both technical and non-technical audiences. Most importantly, our training techniques are so advanced that they result in compute savings of up to 90%. Remarkably, we get this efficiency without sacrificing accuracy; on the contrary, our current Arcee.AI customers report performance gains of 20-30% on internal benchmarks.

So, how do we pull this off? You could say that the pillar of our platform is model merging, which enables you to efficiently train your own domain-specific model on your own data, then merge it with another already-trained Open Source checkpoint so that the result combines the strengths of both. This opens up endless possibilities when it comes to bringing new knowledge into your model, without the need to re-train.

Model merging itself seems somewhat magical, and becomes even more so when it’s paired with the other training steps we offer in the platform (CPT, SFT, DPO).
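To make the merging idea above a little more concrete, here is a minimal sketch of the simplest possible merge: a weighted average of two checkpoints' parameters. This is a toy illustration and not Arcee's or MergeKit's implementation. The `Checkpoint` format (parameter name mapped to a flat list of floats) and the `linear_merge` function are hypothetical names made up for this example; real checkpoints hold large tensors, and real toolkits offer more sophisticated merge methods.

```python
from typing import Dict, List

# Hypothetical toy format: parameter name -> flat list of weights.
Checkpoint = Dict[str, List[float]]


def linear_merge(a: Checkpoint, b: Checkpoint, weight_a: float = 0.5) -> Checkpoint:
    """Return the element-wise weighted average of two checkpoints."""
    if a.keys() != b.keys():
        raise ValueError("checkpoints must share the same parameter names")
    merged: Checkpoint = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"size mismatch for parameter {name!r}")
        # weight_a controls how much of checkpoint `a` survives in the merge.
        merged[name] = [
            weight_a * x + (1.0 - weight_a) * y
            for x, y in zip(a[name], b[name])
        ]
    return merged


# Toy stand-ins for a domain-tuned model and a general-purpose checkpoint.
domain_model = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
general_model = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

merged = linear_merge(domain_model, general_model, weight_a=0.5)
print(merged)
```

The key point is that merging only combines existing weights: no gradient steps are taken, which is why it is so much cheaper than re-training.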

Arcee.AI’s journey: from pioneering Model Merging, to building Arcee Cloud

You may be wondering – how is Arcee.AI so darn good at model merging? Well, we committed to it very early on.

Early this year – soon after the launch of the Open Source model merging toolkit MergeKit – Arcee recognized that the technique was going to take the LLM world by storm. We quickly joined forces with MergeKit (and along with its founder, Charles Goddard, we maintain a firm commitment to keeping it open source).

Our instincts about the importance of model merging have proven to be spot-on. If you have any doubt about how quickly it’s catching on, just look at the Hugging Face Leaderboard – where a majority of the top models have used MergeKit.

We recently extended model merging by releasing evolutionary model merging and open-sourcing this state-of-the-art technique to the community through MergeKit.

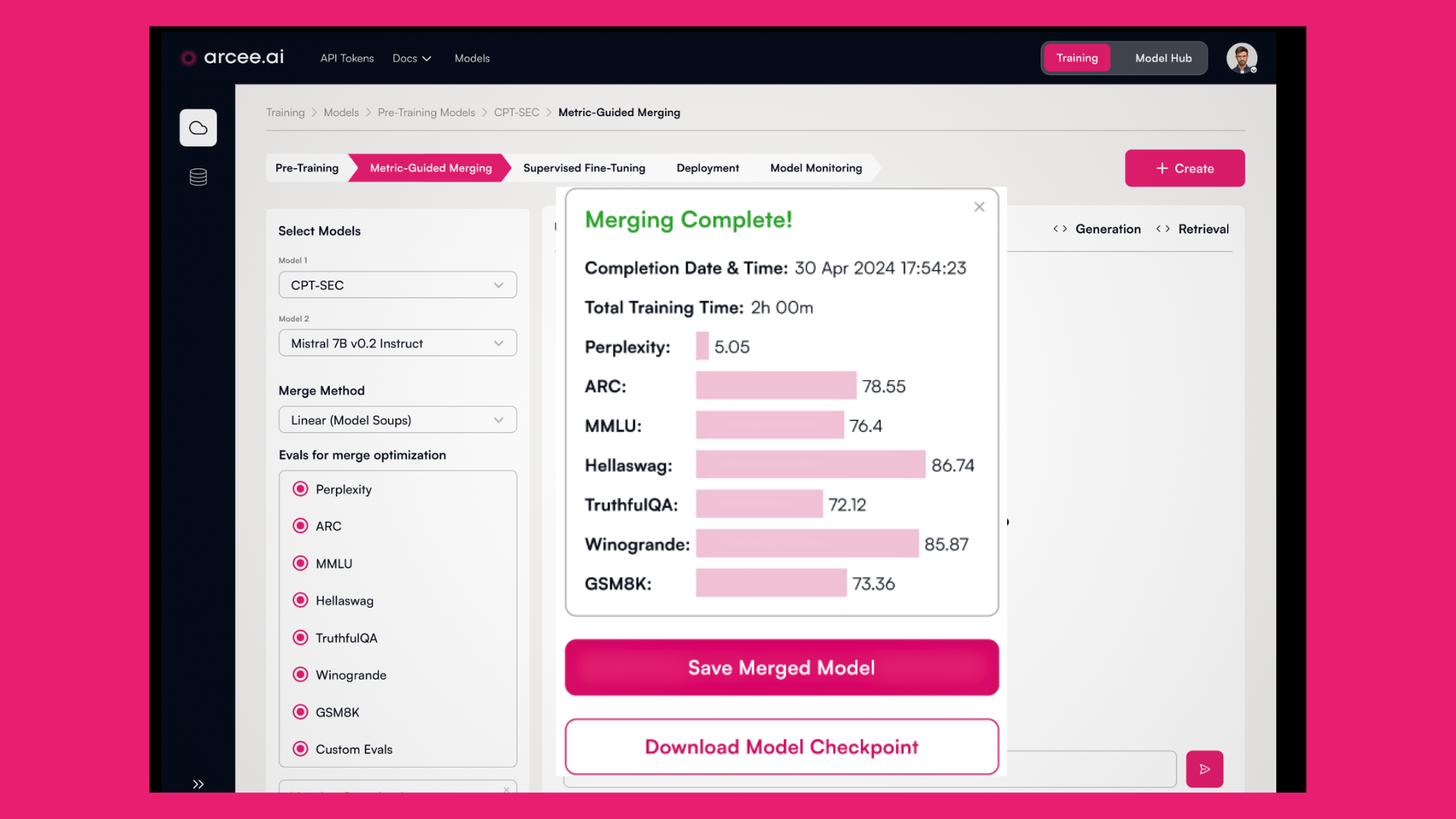

Evolutionary model merging removes the human element from the endless merging scenarios that can be implemented, putting the merging in the hands of the model itself. You provide the model with a list of evals you want to optimize the merge for, then it runs an eval > merge > eval > merge loop over and over until it identifies the optimal merging parameters. Think of it as base merging on steroids.
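The eval > merge loop described above can be sketched in a few lines. This is an illustrative toy under stated assumptions, not the algorithm MergeKit ships: it searches over a single merge weight with a simple mutate-and-select loop, and the `evaluate` function is a hypothetical stand-in for a real eval suite. All names here (`merge`, `evolve_merge_weight`, `toy_eval`) are invented for the example.

```python
import random
from typing import Callable, List, Tuple


def merge(a: List[float], b: List[float], w: float) -> List[float]:
    """Weighted average of two flat weight vectors (toy merge)."""
    return [w * x + (1.0 - w) * y for x, y in zip(a, b)]


def evolve_merge_weight(
    a: List[float],
    b: List[float],
    evaluate: Callable[[List[float]], float],
    generations: int = 50,
    population: int = 8,
    sigma: float = 0.2,
    seed: int = 0,
) -> Tuple[float, float]:
    """Repeat merge -> eval, keeping whichever merge weight scores best."""
    rng = random.Random(seed)
    best_w = 0.5
    best_score = evaluate(merge(a, b, best_w))
    for _ in range(generations):
        for _ in range(population):
            # Mutate the current best weight and clamp it to [0, 1].
            cand = min(1.0, max(0.0, best_w + rng.gauss(0.0, sigma)))
            score = evaluate(merge(a, b, cand))
            if score > best_score:
                best_w, best_score = cand, score
    return best_w, best_score


# Hypothetical eval: higher is better, peaking when the merge weight is 0.8.
model_a = [1.0, 2.0]
model_b = [3.0, 5.0]
target = merge(model_a, model_b, 0.8)


def toy_eval(weights: List[float]) -> float:
    return -sum((x - t) ** 2 for x, t in zip(weights, target))


baseline = toy_eval(merge(model_a, model_b, 0.5))
best_w, best_score = evolve_merge_weight(model_a, model_b, toy_eval)
print(f"best weight: {best_w:.3f}, score: {best_score:.6f}")
```

In a real setting the search space would cover many parameters (for example, per-layer weights), and each evaluation would mean running the merged model through actual benchmarks, which is where most of the cost lies.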

While our team of researchers and engineers has been going deep on model merging, we’ve also maintained a focus on helping the community to merge more effectively and easily. We recently created two Hugging Face Spaces: one for base model merging and one for generating merge config files. In just the first few weeks, we’ve seen some 10,000 people play with model merging through these simple UIs.

These Spaces, however, don’t allow the user to do any pre-training or fine-tuning. For that you need our core Arcee product, which consists of an easy-to-use GUI and includes APIs to do merging as well as CPT, SFT, and DPO (all run inside a customer’s VPC).

Now, with the launch of Arcee Cloud, we're making our state-of-the-art training and merging process significantly more accessible. In addition to offering it as an in-VPC product, we are now also providing it as a Hosted SaaS solution. This expansion ensures that the Arcee platform is more seamless and accessible than ever before. For those without stringent data requirements for in-VPC deployment, Arcee Cloud enables you to transform your dataset into a trained model within days. Furthermore, we have introduced the capability for you to download the trained model and deploy it to any cloud or platform of your choice.

Model merging & Arcee Cloud: A revolution in LLM development

With Arcee Cloud, you can go from dataset to deployment of a highly accurate LLM trained on your domain and your data in a matter of days, not weeks or months. This is game-changing when it comes to getting state-of-the-art custom language models into production and getting value from your GenAI investments. We’re excited for you to join us on our journey. Sign up here and we'll be in touch on launch day!