What is an SLM (Small Language Model)?

The world of LLMs (Large Language Models) has cooked up a storm in recent years, with the rise of OpenAI’s GPT and the increasing proliferation of open-source language models. Excitement abounds, and virtually everyone and their grandma is mesmerized by the fact that a chat-based LLM can recite Shakespearean sonnets or even compose new haikus. For the more technically savvy crowd, GitHub Copilot is no stranger, and countless established B2C and B2B brands are now exploring how to incorporate AI into their product suites.

But as many companies we’ve been speaking to have become acutely aware, the promise of general-purpose LLMs is not all it seems. There are questions around efficiency, accuracy, and use case specificity, not to mention the risk of data leakage, that need to be addressed. This is precisely where we at Arcee believe SLMs will play an important role. Let’s take a look at what exactly an SLM is in the following sections.

Why the future lies with small, more specialized language models

To talk about the benefits of SLMs, we first need to look at the drawbacks of LLMs. Here are some of the problems we have witnessed firsthand while working with enterprises looking to build their own language models:

Hallucination

Because general-purpose LLMs, including many closed-source ones, are trained on massive corpora spanning everything from public court documents to Reddit posts and Hemingway novels, their responses to a given prompt are prone to hallucination: without the right context or accurate retrieval, the model can produce fluent but incorrect output.

Cost of training models

Adapting LLMs that weren’t built for the purpose into use-case-specific models tends to rack up disproportionately large cloud bills.

Fragmented toolstack

Another core challenge companies run into when looking to train their own models with LLMs is the fragmented toolstack needed to make everything a reality. There isn’t a one-stop solution for companies to reliably train and deploy their own models.

Privacy and compliance concerns with data sharing

Many existing solutions for building custom LLMs are offered through third-party hosted cloud services. Companies that have proprietary data to leverage for their own models rarely want to share that data with a third party, which makes 99% of current solutions unsuitable for enterprise needs.

Closed-source solutions are not reliable for core IP development

Basing your core IP strategy on the same general-purpose APIs your competitors use is risky, because it makes a single outside provider a point of failure. Third-party providers can make drastic changes to the underlying models, affecting performance, on their own terms.

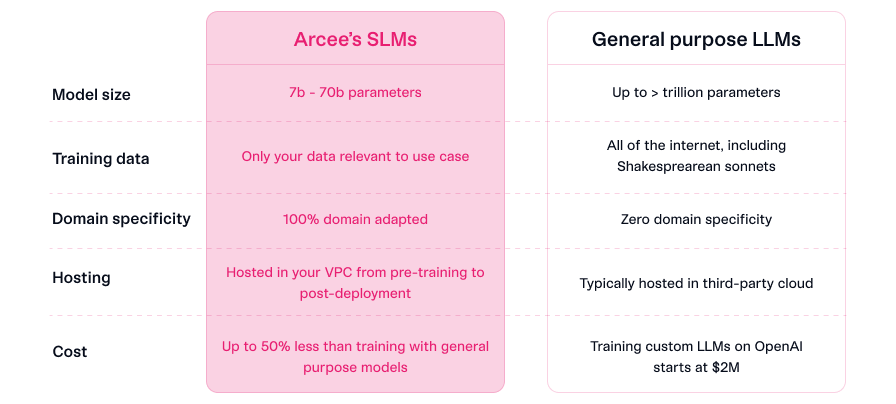

With these concerns spelled out, it becomes clear that for most businesses with specific internal and external use cases, closed-source and general-purpose LLMs will not suffice. In light of this, what we call Small Language Models (SLMs) are a much more suitable option for 95%+ of use cases.

There are several reasons behind this. First, SLMs do not require a large corpus of training data, significantly cutting down the compute cost and training time needed compared to LLMs. Second, SLMs are trained only on relevant data, reducing hallucination and increasing output accuracy. Furthermore, SLMs trained and deployed through Arcee’s proprietary in-VPC adaptation system ensure 100% data retention within the four walls of a company.

How Arcee is pioneering SLMs

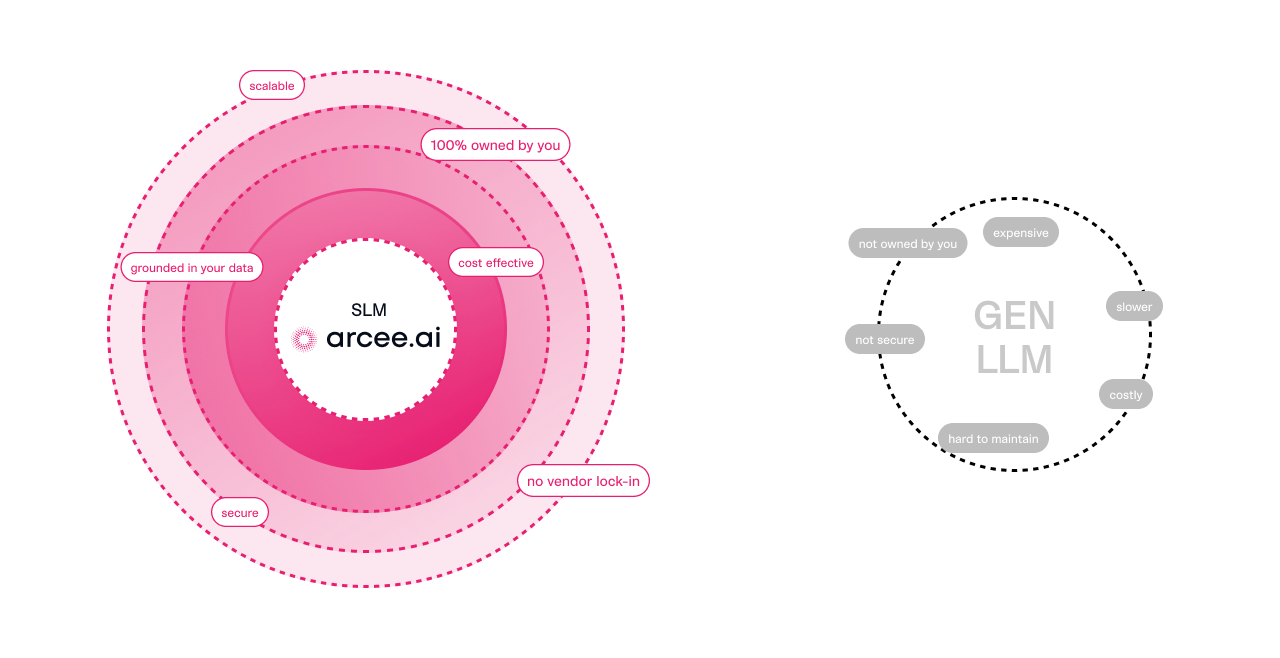

At Arcee, we believe that SLM stands for not just small language models, but also specialized, secure, and scalable models. Here’s what we mean and how we achieve that for our customers:

Small language models

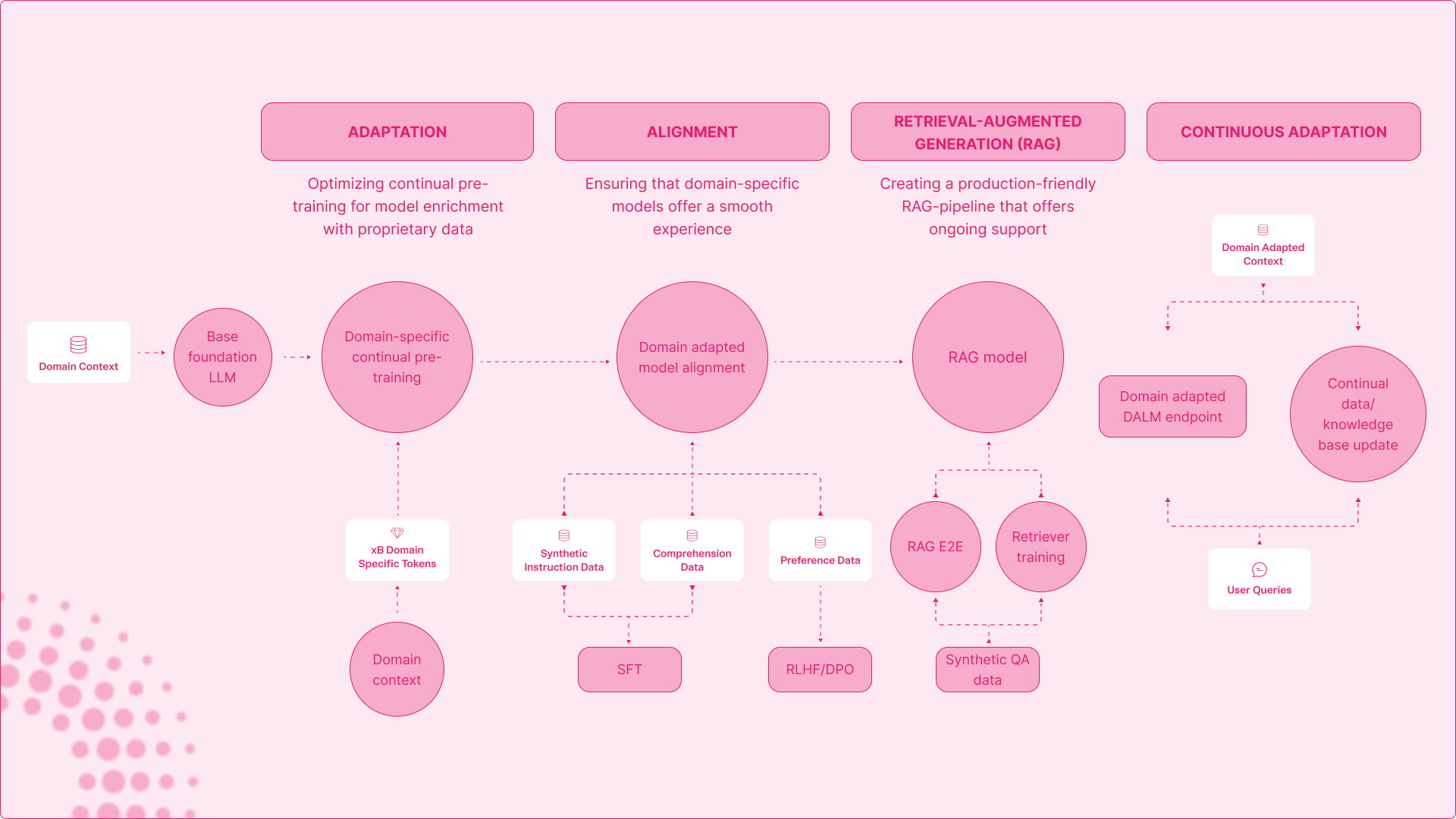

Training LLMs on your data, as discussed above, can be inefficient in terms of both cost and time. Arcee’s DALM (domain adaptation language model) takes a different approach: it pre-trains a use-case-specific model on the data and context you provide, so you can deploy and continuously improve your own SLM. Because the model only needs to cover your data and use case, it can be drastically smaller (7B to 70B parameters), leading to much quicker time to value and higher cost efficiency without compromising quality.
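To make the size argument concrete, here is a back-of-the-envelope sketch (not Arcee’s tooling or method) of how much memory it takes just to hold a model’s weights, assuming 16-bit weights at 2 bytes per parameter. Training needs considerably more on top of this for gradients, optimizer state, and activations, but that overhead also grows with parameter count. The 175B entry is included only as a GPT-3-scale point of comparison.

```python
# Back-of-the-envelope estimate of the memory needed just to store model weights.
# Assumption: 16-bit (fp16/bf16) weights, i.e. 2 bytes per parameter.
# This ignores activations, gradients, optimizer state, and KV cache.

BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # 7B and 70B bracket the SLM range above; 175B is GPT-3 scale for contrast.
    for label, params in [("7B", 7e9), ("70B", 70e9), ("175B", 175e9)]:
        print(f"{label}: ~{weight_memory_gb(params):.0f} GB of 16-bit weights")
```

Running it prints roughly 14 GB, 140 GB, and 350 GB respectively: the smaller model fits on far less hardware, which is where much of the cost difference comes from.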

Specialized language models

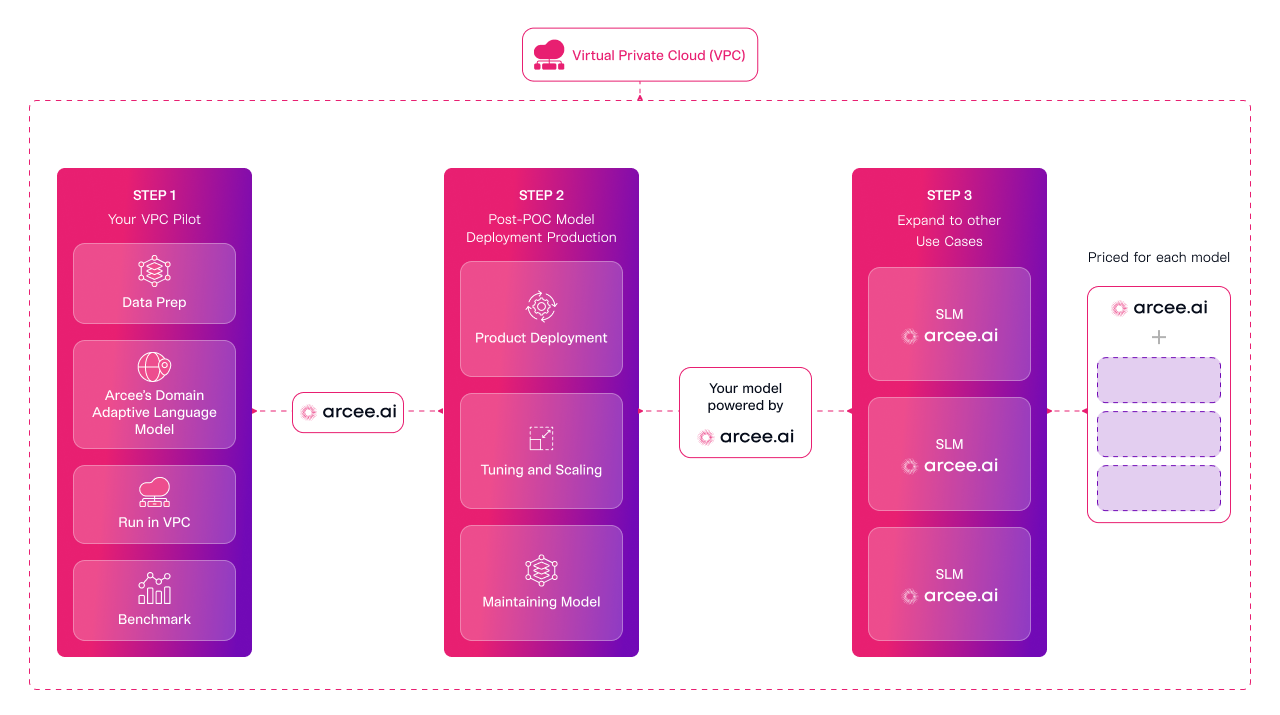

For each use case, you want your SLM to be adapted to and focused on the right context. This is why specialized language models, custom trained and built to cater to one use case or need, are the right approach to building your SLMs. Arcee makes this possible through our unique end-to-end SLM adaptation system, which offers pre-training, alignment, and RAG (Retrieval-Augmented Generation) all in one place. This is also critical to building your own IP: because our system lets you swap in any base model as better ones emerge, it mitigates the risk of vendor lock-in.

Secure language models

A key concern for the companies we speak to is security and data privacy. Arcee is the only custom SLM solution that works 100% in your own environment through in-VPC deployment. This means there is no data leakage at any point in your model training journey with Arcee, all the way from pre-training to deployment to continuous adaptation.

Scalable language models

There is no good reason why an SLM built for compliance should be bloated into also being a customer success SLM; each use case can instead get its own lean, specialized model. With Arcee’s end-to-end (E2E) adaptation system, companies can train and deploy SLMs for any number of use cases at a fraction of the cost.

In a nutshell, at Arcee, SLMs represent the future of applied NLP, where size, specialization, security, and scalability all play a part. As we said earlier in this post, we believe in a future where companies are empowered by small, specialized models that are purpose-built for specific use cases. Our unique end-to-end adaptation system has already delivered on this promise for early adopters, and it will continue to serve the many enterprises looking to build and own their SLMs.

If building SLMs sounds exciting to you, we’d love to hear from you and discuss how Arcee can help your business build SLM assets right in your own cloud.

We are at the beginning of something big, and have garnered tremendous interest from large enterprises across the insurance, financial services, GovTech, and automotive industries as early design partners, and we’d love to welcome more conversations. Get in touch now!