Our Series A, Julien Simon Joins the Team, & Arcee Cloud Goes Live

Less than a year after emerging from stealth, Arcee AI has hit the headlines – announcing a major Series A, the arrival of Chief Evangelist Julien Simon, and the launch of a new cloud platform.

In the world of Small Language Models, it’s safe to say that it’s been a BIG week.

🚀 First, we’re proud to announce our $24 million Series A.

Emergence Capital led the round, which also included seed investors Long Journey Ventures, Scott Banister, Flybridge, Centre Street Partners, and new investor Arcadia Capital.

This round represents our next step in bringing Small Language Models (#SLMs), powered by Model Merging and Spectrum, to as many organizations as possible.

You can read more about our vision in this VentureBeat exclusive featuring an interview with our Co-Founder / CEO Mark McQuade.

🔥 Second, we have a new Chief Evangelist.

Julien Simon has been one of the most highly respected global #AI voices for more than a decade, and his decision to jump ship from Hugging Face to Arcee AI sums up everything you need to know about where the #GenAI market is headed.

We know that SLMs are the future. Julien knows it, too – and you can hear from him live in this Friday’s special edition of our Small Language Model Show.

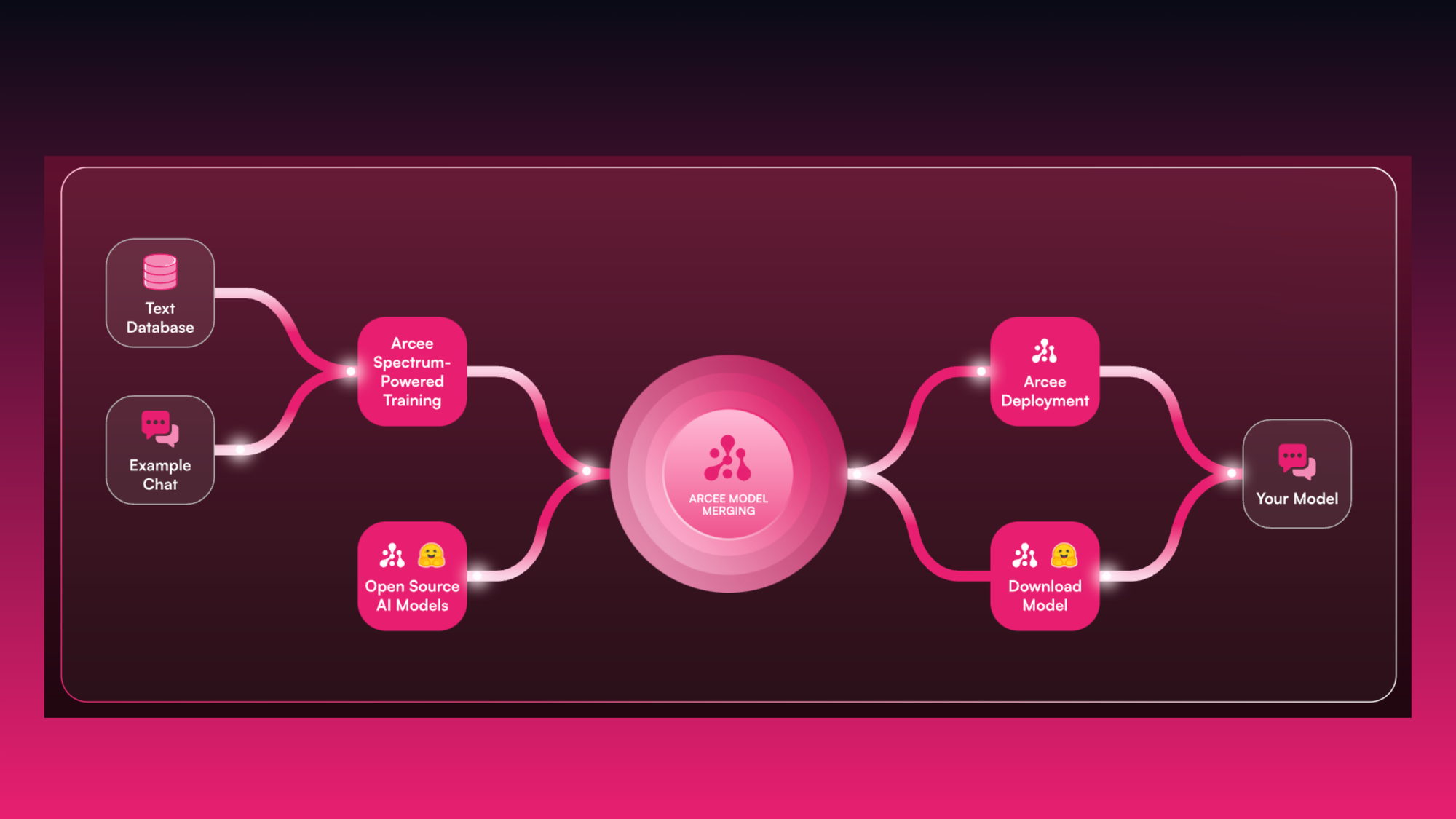

☁️ Third – and let’s face it, this is really the most important news – we have released the cloud version of our SLM training-merging-deploying platform. It’s called Arcee Cloud. You can sign in to the free tier directly on the website, and you can take a tour here.

Our goal since Day 1 has been to bring domain-specific language models to the masses, and the convenience and accessibility of Arcee Cloud take us one major step closer to fulfilling that vision.

Finally – we want to hear from you.

As we witness the broader market moving away from the impracticality of #LLMs and towards SLMs, we're giving companies of all sizes the solution they actually need when it comes to GenAI. Tell us about the challenges you're facing and the use cases you want to address, and we'll show you how our end-to-end platform can give you performant, efficient models trained on your own data.

We look forward to hearing from you 🙌🏼