How to Merge Llama3 Using MergeKit

What do we do at Arcee when an exciting new model drops? We MERGE IT on MergeKit! We walk you through the process and share the initial results.

🦙 Meta just released Llama-3 and its evals are epic!

🚀 Training and Fine-Tuning: Trained on 15T tokens and further refined with 10M human-annotated samples.

🦙 Model Variants: 8B and 70B versions with both Instruct and Base formats (Note: No Mixture of Experts (MoE) versions).

📏 Benchmark Achievement: Llama-3-70B is the best open large language model on MMLU benchmark (> 80% 🤯).

💻 Coding Proficiency: Instruct 8B achieves a 62.2% score and Instruct 70B scores 81.7% on the HumanEval coding benchmark.

✍🏻 Tokenizer and Vocabulary: Utilizes a Tiktoken-based tokenizer with a vocabulary size of 128k.

🪟 Context Window: Features a default context window of 8,192, which can be extended as needed.

📐 Alignment Techniques: Employs SFT, PPO, and DPO for alignment post-training.

✅ Commercial Use: Fully permitted for commercial applications.

🤗 Available on Hugging Face.

Shout out to Philipp Schmid for doing the homework for us!

With the Llama-3 release, what do we want to do at Arcee? Merge it!🚀 Although there are no fine-tuned versions of Llama-3 yet, we want to validate that MergeKit supports merging this great new model. We can experiment and validate by merging Llama-3 8B Base with Llama-3 8B Instruct!

First, we set up our server to be ready to go for our merge experiments:

    apt update
    apt install -y vim
    git clone https://github.com/arcee-ai/mergekit
    python -m pip install --upgrade pip
    cd mergekit && pip install -q -e .
    pip install -qU transformers huggingface_hub PyYAML

Now, we authenticate with the HF hub:

    export HF_TOKEN='YOUR_TOKEN_HERE'

Then, we authenticate with the Hugging Face CLI:

    huggingface-cli login

Enter your HF token here again when prompted.

Now, we create our merge config file. We will use the SLERP (spherical linear interpolation) merge method as the example for this experiment.
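To give some intuition for what SLERP does with two sets of weights, here is a minimal pure-Python sketch of spherical linear interpolation between two vectors. It is an illustration only, not MergeKit's actual implementation: the function name `slerp`, the `eps` threshold, and the fallback to linear interpolation for nearly parallel vectors are assumptions made for this example. In MergeKit's SLERP, `t = 0` returns the base model and `t = 1` returns the other model.

```python
import math


def slerp(t, v0, v1, eps=1e-8):
    """Spherically interpolate between two equal-length vectors.

    t=0 returns v0 and t=1 returns v1 (illustrative sketch only).
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two vectors, clamped for safety
    dot = sum(a * b for a, b in zip(v0, v1)) / max(norm0 * norm1, eps)
    dot = max(-1.0, min(1.0, dot))
    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    if sin_theta < eps:
        # Vectors are (anti)parallel: fall back to plain linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


# Halfway between two orthogonal unit vectors: both components are about 0.707
print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```

Unlike a plain average, the interpolated vector follows the arc between the two inputs, so for unit-length inputs the result keeps unit length.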

Let’s create a Python file that we can run on our server to create our YAML file:

    vi create_yaml.py

First, we can create create_yaml.py with the following code:

    import yaml

    MODEL_NAME = "llama-3-base-instruct-slerp"

    yaml_config = """
    slices:
      - sources:
          - model: meta-llama/Meta-Llama-3-8B
            layer_range: [0, 32]
          - model: meta-llama/Meta-Llama-3-8B-Instruct
            layer_range: [0, 32]
    merge_method: slerp
    base_model: meta-llama/Meta-Llama-3-8B-Instruct
    parameters:
      t:
        - filter: self_attn
          value: [0, 0.5, 0.3, 0.7, 1]
        - filter: mlp
          value: [1, 0.5, 0.7, 0.3, 0]
        - value: 0.5
    dtype: bfloat16
    """

    print("Writing YAML config to 'config.yaml'...")

    try:
        with open('config.yaml', 'w', encoding="utf-8") as f:
            f.write(yaml_config)
        print("File 'config.yaml' written successfully.")
    except Exception as e:
        print(f"Error writing file: {e}")

Now, we can run the above script by executing:

    python create_yaml.py

Our config file is now created.
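The `t` entries for `self_attn` and `mlp` in the config are short lists rather than single numbers. MergeKit treats such a list as a gradient that is interpolated across the layers. The sketch below shows one plausible way the five anchor values could spread over 32 layers. The helper name `expand_gradient` and the exact interpolation scheme (linear, by relative layer depth) are assumptions for illustration, not a copy of MergeKit's code.

```python
def expand_gradient(values, num_layers):
    """Linearly interpolate a short list of anchor values across num_layers layers."""
    if num_layers == 1 or len(values) == 1:
        return [float(values[0])] * num_layers
    result = []
    for layer in range(num_layers):
        # Relative depth of this layer, from 0.0 (first) to 1.0 (last)
        pos = layer / (num_layers - 1)
        scaled = pos * (len(values) - 1)
        lo = int(scaled)                      # anchor at or below this depth
        hi = min(lo + 1, len(values) - 1)     # next anchor, clamped at the end
        frac = scaled - lo
        result.append((1 - frac) * values[lo] + frac * values[hi])
    return result


# Per-layer t values for the two filters used in the config
self_attn_t = expand_gradient([0, 0.5, 0.3, 0.7, 1], 32)
mlp_t = expand_gradient([1, 0.5, 0.7, 0.3, 0], 32)

for layer in (0, 8, 16, 24, 31):
    print(layer, round(self_attn_t[layer], 3), round(mlp_t[layer], 3))
```

Under this reading, the attention weights start at the base model (`t = 0`) in the first layer and end at the other model (`t = 1`) in the last, while the MLP weights run the opposite way. Tensors matched by neither filter use the constant `0.5`.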

We will now run our merge command to perform our merge:

    mergekit-yaml config.yaml merge --copy-tokenizer --allow-crimes --out-shard-size 1B --lazy-unpickle --trust-remote-code

Epic, the merge was successful!
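Before moving on, it can be worth a quick sanity check that the `merge` output folder looks complete. The sketch below is a hypothetical helper (`check_merge_output` is not part of MergeKit). It assumes the merge wrote a `config.json`, one or more `.safetensors` shards, and a tokenizer file copied by `--copy-tokenizer`; adjust the expected file names if your output differs.

```python
from pathlib import Path


def check_merge_output(merge_dir):
    """Return a list of problems found in a merged-model folder (empty if none)."""
    root = Path(merge_dir)
    if not root.is_dir():
        return [f"{root} is not a directory"]
    problems = []
    if not (root / "config.json").is_file():
        problems.append("missing config.json")
    # Assumption: weights are saved as safetensors shards
    if not any(root.glob("*.safetensors")):
        problems.append("no .safetensors weight shards found")
    # Assumption: the tokenizer is stored under one of these file names
    tokenizer_files = ("tokenizer.json", "tokenizer.model")
    if not any((root / name).is_file() for name in tokenizer_files):
        problems.append("no tokenizer file found")
    return problems


if __name__ == "__main__":
    issues = check_merge_output("merge")
    print("Looks complete." if not issues else "\n".join(issues))
```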

Arcee has also made life easier: as an alternative to writing the config by hand, you can use our Mergekit Config Generator UI to generate your merge config. First, enter the names of the models and their number of layers. Then, select the merge method, as well as the data type (dtype). Finally, click on the “Create config.yaml” button and there you go. The config file is ready!

Next, copy the config file and paste it into our Mergekit GUI. Enter your HF Write Token, and select a name for the merged model. Then, click on the “Merge” button. Now, the merged model is available in your repo. Congratulations!

Now, we want to create our model card for the HF hub:

    vi create_model_card.py

Paste the following code into the Python file:

    from create_yaml import yaml_config, MODEL_NAME
    from huggingface_hub import ModelCard, ModelCardData
    from jinja2 import Template
    import yaml

    username = "arcee-ai"

    template_text = """
    ---
    license: apache-2.0
    tags:
    - merge
    - mergekit
    {%- for model in models %}
    - {{ model }}
    {%- endfor %}
    ---

    # {{ model_name }}

    {{ model_name }} is a merge of the following models using [mergekit](https://github.com/arcee-ai/mergekit):

    {%- for model in models %}
    * [{{ model }}](https://huggingface.co/{{ model }})
    {%- endfor %}

    ## 🧩 Configuration

    ```yaml
    {{- yaml_config -}}
    ```
    """

    # Create a Jinja template object
    jinja_template = Template(template_text.strip())

    # Get list of models from config
    data = yaml.safe_load(yaml_config)
    if "models" in data:
        models = [data["models"][i]["model"] for i in range(len(data["models"])) if "parameters" in data["models"][i]]
    elif "parameters" in data:
        models = [data["slices"][0]["sources"][i]["model"] for i in range(len(data["slices"][0]["sources"]))]
    elif "slices" in data:
        models = [data["slices"][i]["sources"][0]["model"] for i in range(len(data["slices"]))]
    else:
        raise Exception("No models or slices found in yaml config")

    # Fill the template
    content = jinja_template.render(
        model_name=MODEL_NAME,
        models=models,
        yaml_config=yaml_config,
        username=username,
    )

    # Save the model card
    card = ModelCard(content)
    card.save('merge/README.md')

Now, to create the model card we will run:

    python create_model_card.py

Our model card is now created.

Now, we will push our model to the HF hub.

First, we will create our upload_to_hf.py Python script:

    vi upload_to_hf.py

Paste in the following code:

    from create_yaml import yaml_config, MODEL_NAME
    import os
    from huggingface_hub import HfApi

    username = "arcee-ai"

    # Use the environment variable for the API token
    api_token = os.getenv("HF_TOKEN")
    if api_token is None:
        raise ValueError("Hugging Face API token not set. Please set the HF_TOKEN environment variable.")

    api = HfApi(token=api_token)
    repo_id = f"{username}/{MODEL_NAME}"

    # Create a new repository on Hugging Face
    api.create_repo(repo_id=repo_id, repo_type="model")

    # Upload the contents of the 'merge' folder to the repository
    api.upload_folder(repo_id=repo_id, folder_path="merge")

Now, run our upload script:

    python upload_to_hf.py

Now, we have a merged Llama-3 model in our Hugging Face hub.

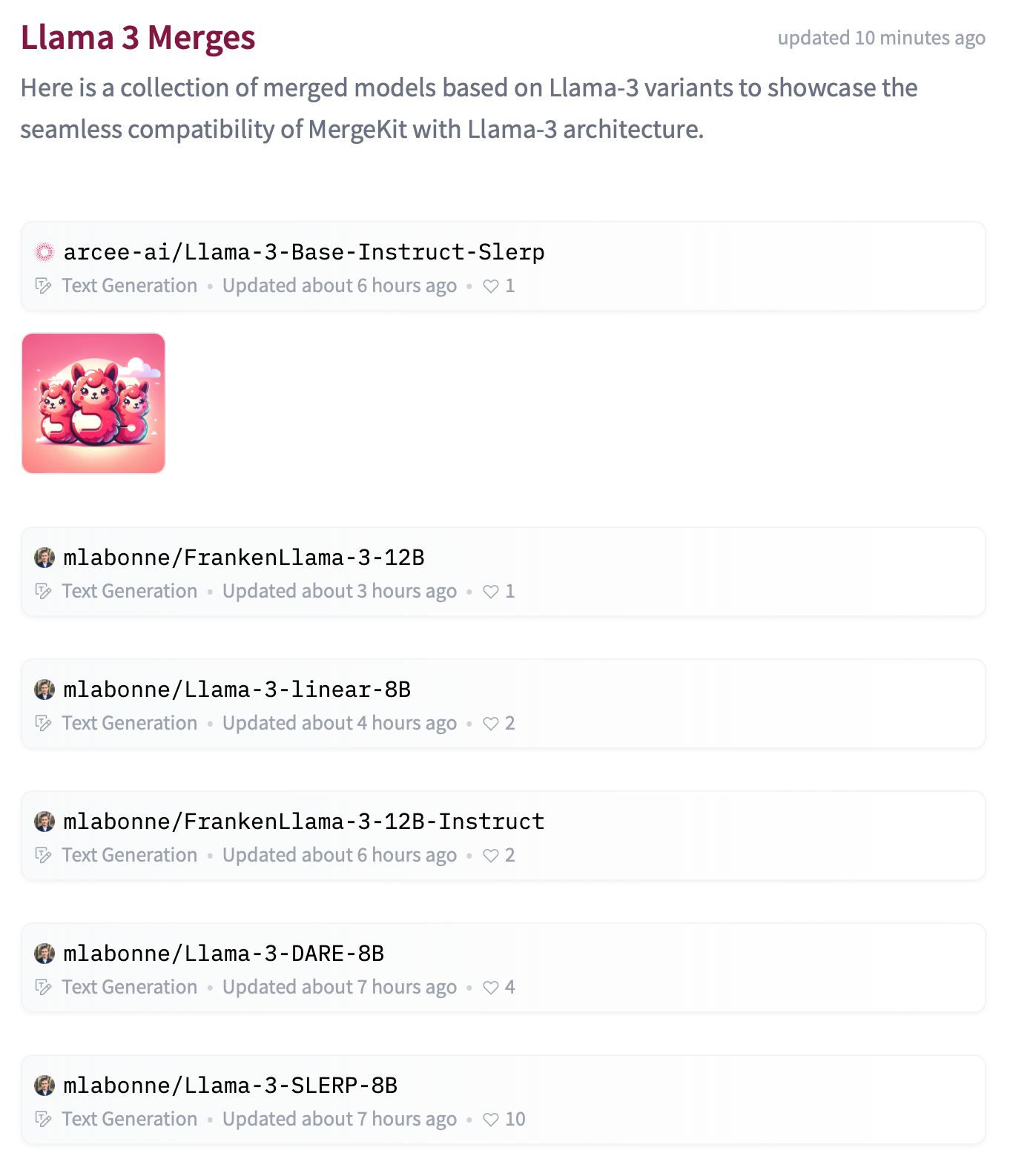

You can follow the same steps to try different merge methods (e.g., Linear, SLERP, DARE-TIES, Passthrough, and Franken merging) available on MergeKit. Here is a collection of merged models using Llama-3 variants:

Our experiment aimed to showcase the seamless compatibility of MergeKit with Llama-3-based model variants. It's time to elevate our game! Start fine-tuning and merging with this phenomenal model to unlock new potentials. Get ready to dive into a world where innovation meets efficiency. Let's merge into the future with Llama-3! 🌟

References

- Goddard, C., Siriwardhana, S., Ehghaghi, M., Meyers, L., Karpukhin, V., Benedict, B., ... & Solawetz, J. (2024). Arcee's MergeKit: A Toolkit for Merging Large Language Models. arXiv preprint arXiv:2403.13257.

- Meta. (2024, April). Introducing Meta Llama 3: the most capable openly available LLM to date. Meta. https://ai.meta.com/blog/meta-llama-3/