Train, Merge, & Domain-Adapt Llama-3.1 with Arcee AI

Get Llama-3.1, but better: customize the open-source model for all your needs using Arcee AI's training, merging, and adaptation techniques and tools. Our team created this guide to get you started.

We are excited to announce Llama-3.1 training and merging support within Arcee Cloud, Arcee Enterprise (VPC), and mergekit.

Llama-3.1's 128K context window addresses a recurring problem for our customers, who build domain-adapted Small Language Models (SLMs) but still need the long context they're accustomed to from general model APIs.

The community had previously worked on longer-context SLMs, but Llama-3.1 establishes a solid pre-trained standard for us all to work from.

The best part about Llama-3.1 is that you can keep training it!

And as we always recommend, you should undoubtedly merge it.

Continuously Pre-Train Llama-3.1 on Arcee Cloud + VPC

Continuous Pre-Training continues training a language model on its next-token prediction objective, using your proprietary text. This lets you extend Llama's knowledge within its parameters, rather than externally through prompting, reasoning, or Retrieval-Augmented Generation (RAG).
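To make the next-token objective concrete, here is a minimal sketch of the loss that this kind of training minimizes. It is plain Python for illustration only, not the Arcee training routine; the function name and the list-based inputs are assumptions made for this example.

```python
import math

def next_token_loss(logits, token_ids):
    """Average cross-entropy of predicting token t+1 from position t.

    logits: list of per-position score lists, logits[t][v] for vocab id v.
    token_ids: the token sequence fed to the model (same length as logits).
    """
    total = 0.0
    count = 0
    # Position t predicts token t+1, so the last position has no target.
    for t in range(len(token_ids) - 1):
        scores = logits[t]
        target = token_ids[t + 1]
        # Numerically stable log-sum-exp for the softmax normalizer.
        m = max(scores)
        log_z = m + math.log(sum(math.exp(s - m) for s in scores))
        # Negative log-probability of the true next token.
        total += log_z - scores[target]
        count += 1
    return total / count if count else 0.0
```

With uniform scores over a four-token vocabulary, the loss equals `math.log(4)`; training on your own text pushes this number down for the sequences in your corpus.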

For Llama-3.1, you can Continuously Pre-Train with a smaller context window (e.g., 8K-token stacks) and merge back into the extended context window without losing the knowledge you injected.
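As a rough illustration of what fixed-size token stacks look like, the sketch below concatenates tokenized documents and cuts them into equal-length blocks. The function name, the end-of-sequence separator, and the choice to drop the trailing remainder are assumptions for this example, not a description of Arcee's internal pipeline.

```python
def pack_into_stacks(docs, stack_size, eos_id):
    """Concatenate tokenized documents and cut them into fixed-size stacks.

    docs: iterable of token-id lists, one per document.
    An EOS id is appended after each document so boundaries stay visible.
    A trailing remainder shorter than stack_size is dropped.
    """
    buffer = []
    stacks = []
    for doc in docs:
        buffer.extend(doc)
        buffer.append(eos_id)
        # Emit as many full stacks as the buffer currently holds.
        while len(buffer) >= stack_size:
            stacks.append(buffer[:stack_size])
            buffer = buffer[stack_size:]
    return stacks
```

For 8K stacks you would call this with `stack_size=8192` and the tokenizer's own end-of-sequence id.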

To reduce training time without compromising quality, we scanned Llama-3.1 with Arcee Spectrum and integrated the model into the Arcee Continuous Pre-Training routines.

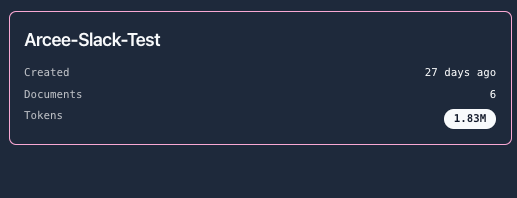

First, I inject a few text tokens from our internal Arcee Slack.

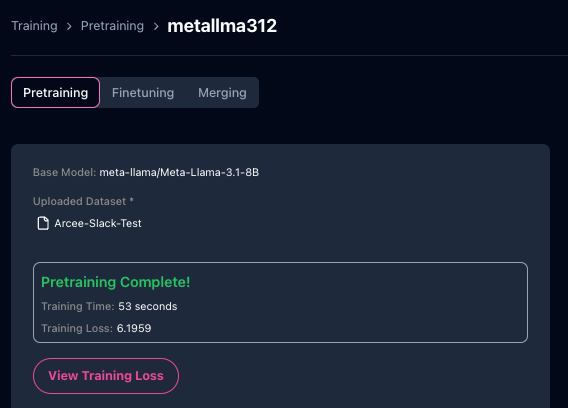

Then, I run Continuous Pre-Training on Llama-3.1-8B.

We can observe knowledge injection of Arcee Slack tokens, even with a small sample set of tokens.

See here for more Arcee Pre-Training docs.

Fine-Tune Llama-3.1 on Arcee Cloud + VPC

You can also fine-tune Llama-3.1 for specific tasks. For example, you might do this to create an agent that routes user queries.
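To give a sense of what training data for a query-routing task could look like, here is a small sketch that builds chat-style records and serializes them as JSONL. The route labels, the record schema, and the helper names are all hypothetical; check the Arcee AI alignment documentation for the dataset format the platform actually expects.

```python
import json

# Hypothetical route labels; replace with the destinations your agent supports.
ROUTES = ("billing", "technical_support", "sales")

def make_routing_example(query, route):
    """Build one chat-style training record for a query-routing task."""
    if route not in ROUTES:
        raise ValueError(f"unknown route: {route!r}")
    return {
        "messages": [
            {"role": "system",
             "content": "Route the user query to one of: " + ", ".join(ROUTES)},
            {"role": "user", "content": query},
            # The assistant turn is the label the model learns to produce.
            {"role": "assistant", "content": route},
        ]
    }

def to_jsonl(examples):
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(e) for e in examples)
```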

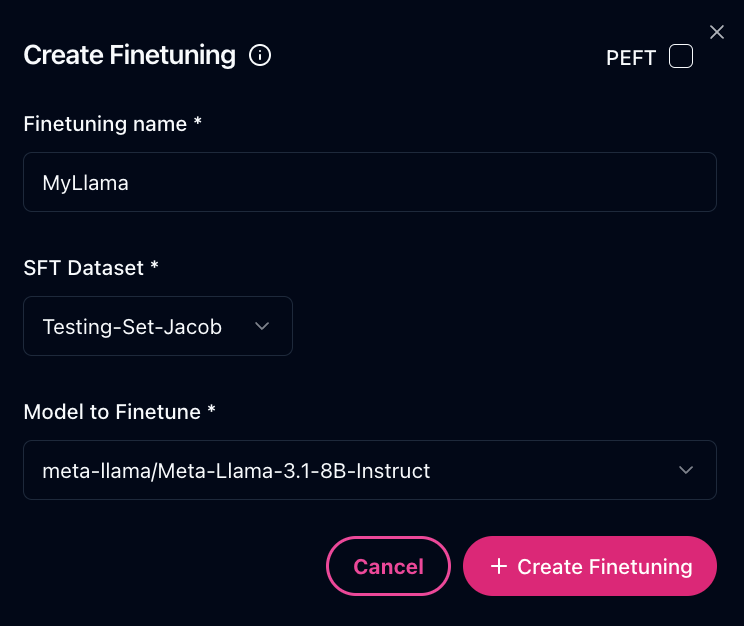

Here, I launch a fine-tuning job on the instruct model.

For more details on usage, see the Arcee AI alignment documentation.

Merging Llama-3.1

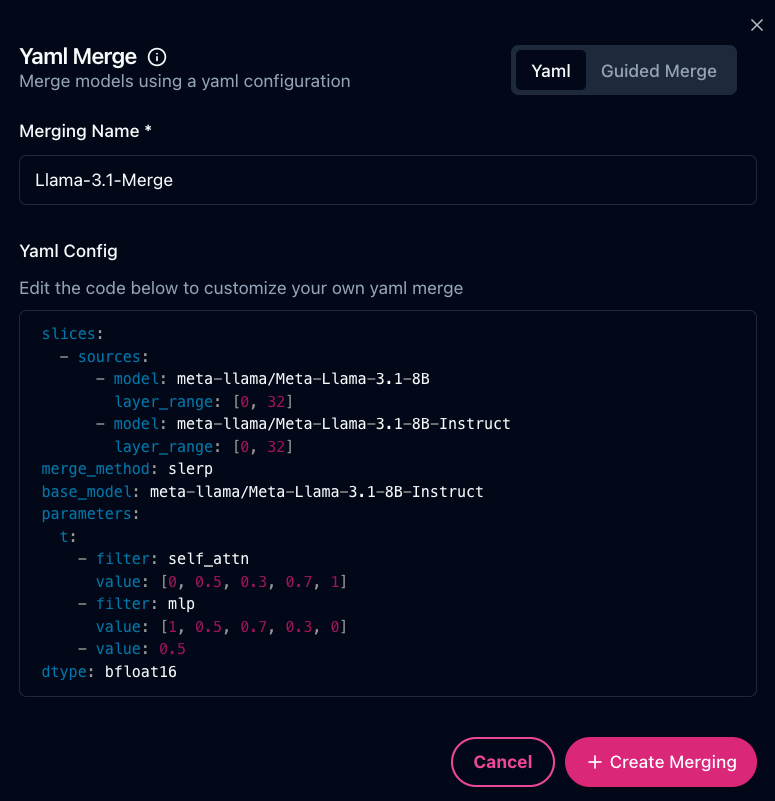

As always at Arcee AI, we recommend that you merge Llama-3.1 after you have trained it. You can merge locally with mergekit or on Arcee Cloud.
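For intuition about what a SLERP (spherical linear interpolation) merge computes, here is a minimal sketch on plain Python lists. It is not mergekit's implementation; the function name, the `eps` threshold, and the fallback to linear interpolation for nearly parallel vectors are assumptions made for this example.

```python
import math

def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two equal-length vectors.

    t=0 returns v0 and t=1 returns v1. Falls back to linear interpolation
    when a vector is near zero or the two are nearly (anti)parallel.
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    if norm0 < eps or norm1 < eps:
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    # Cosine of the angle between the two vectors, clamped for acos.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    dot = max(-1.0, min(1.0, dot))
    omega = math.acos(dot)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < eps:
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    # Weights follow the arc between the vectors rather than the chord.
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]
```

In a model merge the same idea is applied to weight tensors, with the interpolation factor `t` controlling how far the result moves from one model toward the other.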

Here is a simple SLERP merge that merges the base model back into the instruct model.

```yaml
slices:
  - sources:
      - model: meta-llama/Meta-Llama-3.1-8B
        layer_range: [0, 32]
      - model: meta-llama/Meta-Llama-3.1-8B-Instruct
        layer_range: [0, 32]
merge_method: slerp
base_model: meta-llama/Meta-Llama-3.1-8B-Instruct
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```

OS Models Closing In On Closed Source

The gap between open source and closed source AI is shrinking every day. Here at Arcee AI, we're thrilled and honored to play a role in this world-changing innovation.

As open-source models become increasingly powerful and performant, adapting them to specific domains is quickly becoming the obvious choice.

At Arcee AI, we're committed to providing tools to help you adapt open source AI more efficiently. Now, you can also apply these tools to Llama-3.1 and adapt it to your needs.

If you'd like to try Arcee Cloud, please sign up and read the documentation.

Happy training! And as always, happy merging!