Langchain+Arcee: build domain models with greater flexibility

Combining Arcee’s generators and retrievers with Langchain allows you to build almost any AI application you wish, from using retrievers to fully autonomous systems using chains and agents.

A major flaw of general-purpose language models is their lack of domain understanding, which leads to more hallucinations and less flexibility across use cases. This is where Domain Adaptive Language Models (DALMs) come into play: they understand your data and come with highly accurate generators and retrievers.

Arcee's models are hosted entirely in your VPC, with no rate limits and no data privacy concerns. With Arcee, you own the models and your data.


Setup

Setting up Arcee and Langchain is simple. First, install the necessary packages with pip:

```shell
pip install -q langchain arcee-py
```

You'll need an Arcee account, so sign up for a free one if you don't already have it. Then either set the ARCEE_API_KEY environment variable, for example with export ARCEE_API_KEY='your_api_key_here', or pass the key directly when creating an instance in your script:

```python
from langchain.llms import Arcee

# Pass the key directly, or omit arcee_api_key if ARCEE_API_KEY is already exported
arcee = Arcee(model="DALM-PubMed", arcee_api_key="ARCEE-API-KEY")
```
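If you want the same script to work whether or not the key is exported, you could resolve it up front. Here's a minimal sketch, assuming an explicitly passed key should take precedence over the ARCEE_API_KEY environment variable. resolve_arcee_api_key is a hypothetical helper and is not part of Langchain or arcee-py.

```python
import os
from typing import Optional


def resolve_arcee_api_key(explicit_key: Optional[str] = None) -> str:
    """Return the Arcee API key to use (hypothetical helper).

    Assumed precedence: an explicitly passed key wins; otherwise fall back
    to the ARCEE_API_KEY environment variable.
    """
    if explicit_key:
        return explicit_key
    env_key = os.environ.get("ARCEE_API_KEY")
    if env_key:
        return env_key
    raise ValueError("No Arcee API key found: pass one explicitly or set ARCEE_API_KEY.")


# Usage with the Arcee LLM shown above:
# arcee = Arcee(model="DALM-PubMed", arcee_api_key=resolve_arcee_api_key())
```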

This guide uses DALM-PubMed, but you can also train your own DALMs!

Train your own DALM

Build your own Domain Adaptive Language Model using either Arcee's hosted platform or the APIs.

Here’s a quick guide on how to train your first DALM!

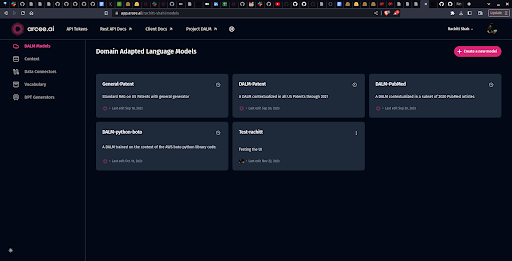

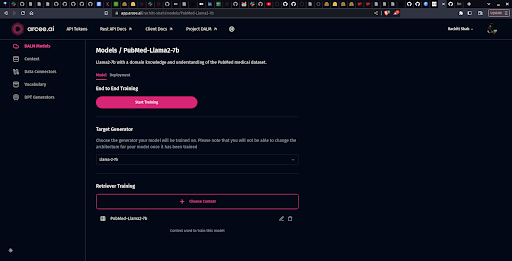

Creating DALMs using our hosted solution

Sign up on our platform and log in to your account.

You'll be directed to our hosted platform. To start creating your own DALM, click the "Create a new model" button. You can also get an API key and train your model with the arcee-py package!

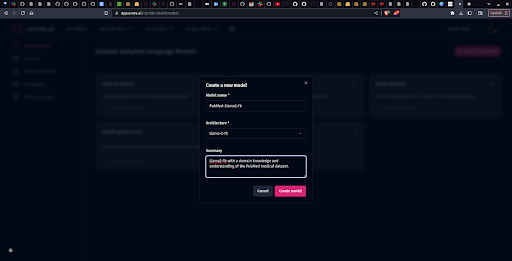

Enter a name for your model and select your desired architecture. If you're unsure which architecture suits your use case, feel free to reach out to us at sales@arcee.ai.

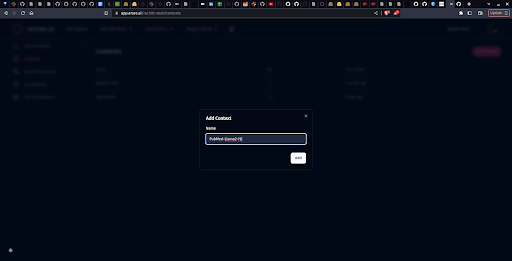

For your model to perform as expected on your use case, you need to add context. Context is the knowledge base you provide to the model.

Retriever training ensures that your retriever is also grounded in your knowledge base. This approach is documented in our DALM GitHub repo!

Click "add context" for your model. You'll be directed to upload context for retriever training and for your model's domain adaptation.

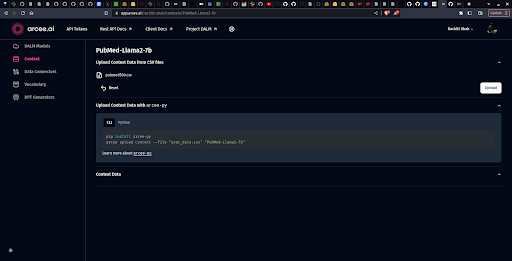

How do we process data? We currently support CSV files, and support for other file types and third-party apps is coming soon.

Your CSV file must include a header row, so Arcee can correctly identify the columns, along with the following columns:

- title: This column should contain the title of each document in your dataset.

- doc: This column should contain the actual content of each document.

You can include additional columns in your CSV file. These will be stored as metadata and can be used during retrieval and generation on your trained models. For example, if you have a category for each document, you can include a category column in your CSV file. Arcee will store this information as metadata.
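Here's a minimal sketch of producing a CSV in this format with Python's standard csv module and checking that the required columns are present. The function names and the sample rows are illustrative placeholders, not part of Arcee's tooling; any extra keys (such as category) become metadata columns.

```python
import csv
import io
from typing import Dict, List, TextIO

# Columns Arcee requires, per the list above
REQUIRED_COLUMNS = ("title", "doc")


def write_context_csv(rows: List[Dict[str, str]], out: TextIO) -> None:
    """Write documents as CSV with a header row.

    Keys other than title/doc (e.g. "category") become extra columns,
    which Arcee stores as metadata.
    """
    # title and doc first, then any metadata columns in the order first seen
    fieldnames = list(REQUIRED_COLUMNS)
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    writer = csv.DictWriter(out, fieldnames=fieldnames)  # missing values become ""
    writer.writeheader()
    writer.writerows(rows)


def missing_required_columns(csv_text: str) -> List[str]:
    """Return the required columns absent from the header row (empty if valid)."""
    header = next(csv.reader(io.StringIO(csv_text)), [])
    return [col for col in REQUIRED_COLUMNS if col not in header]


# Illustrative placeholder rows, not real data
buffer = io.StringIO()
write_context_csv(
    [
        {"title": "Example title 1", "doc": "Example document text.", "category": "example"},
        {"title": "Example title 2", "doc": "More example text."},
    ],
    buffer,
)
print(missing_required_columns(buffer.getvalue()))  # prints []
```

When writing to disk instead of a buffer, open the file with open("context.csv", "w", newline="") (the file name is just an example) so the csv module controls line endings.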

For this cookbook, we’ll be using the pubmed500 dataset.

Once you've uploaded the CSV, return to the DALM Models page, click the name of your model, and select the context to start training!

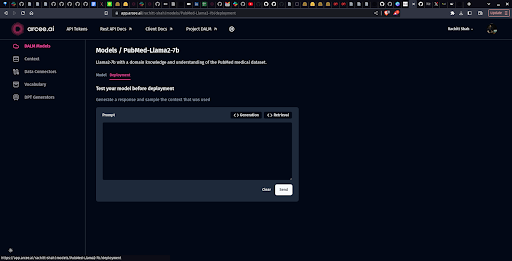

To use your model, go to the deployments page and test it out!

Using the arcee-py SDK to create a DALM

For a more hands-on experience, you can also create, train, and interact with a DALM using the arcee-py package, which provides functions for uploading documents, training the model, and generating or retrieving data. Here's a brief look at the code you might use. Refer to the Setup section to install the SDK and configure your API key.

Uploading Documents

```python
import arcee

# Upload a document named "doc1" to the "pubmed" context
arcee.upload_doc("pubmed", doc_name="doc1", doc_text="sample text")
```
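If your documents already live in a CSV like the one described earlier, you could loop over the rows and call upload_doc once per row. This is a minimal sketch, assuming each row's title is used as doc_name and its doc as doc_text. upload_csv_rows is a hypothetical helper, and the upload function is passed in as a parameter so the sketch can be tried without network access.

```python
import csv
from typing import Callable, Iterable


def upload_csv_rows(lines: Iterable[str], context: str, upload_fn: Callable[..., object]) -> int:
    """Upload each CSV row as one document and return how many were uploaded.

    Assumptions: the row's "title" becomes doc_name and its "doc" becomes
    doc_text; upload_fn has the same call shape as arcee.upload_doc.
    """
    count = 0
    for row in csv.DictReader(lines):
        upload_fn(context, doc_name=row["title"], doc_text=row["doc"])
        count += 1
    return count


# Usage with the real SDK ("pubmed500.csv" is a hypothetical file name):
# import arcee
# with open("pubmed500.csv", newline="") as f:
#     upload_csv_rows(f, "pubmed", arcee.upload_doc)
```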

Training the DALM

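```python
import arcee

# Train a DALM named "medical_dalm" on the "pubmed" context uploaded above.
# Training takes a while; wait for it to complete before generating.
dalm = arcee.train_dalm("medical_dalm", context="pubmed")
```

Generating from the DALM

```python
import arcee

# Load the trained DALM and generate an answer grounded in its context
med_dalm = arcee.get_dalm("medical_dalm")
response = med_dalm.generate("Query about Scopolamine?")
```

Retrieving Documents

```python
import arcee

# Load the trained DALM and retrieve relevant documents from its context
med_dalm = arcee.get_dalm("medical_dalm")
documents = med_dalm.retrieve("Query about Scopolamine?")
```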

Or, using the Arcee CLI:

Upload Context

```shell
arcee upload context pubmed --file doc1
```

Train your DALM

```shell
arcee train medical_dalm --context pubmed
```

Generate from DALM

```shell
arcee generate medical_dalm --query "your query"
```

Retrieve Documents

```shell
arcee retrieve medical_dalm --query "your query"
```

Arcee & Langchain Configuration

When you use your DALMs, Arcee relies on contextual retrievers and generators under the hood.

Using Arcee as a Retriever

The retriever fetches contextual information for your DALM generator model, forming a retrieval-augmented generation (RAG) pipeline.

If you only want to use Arcee's retrievers, you can pair them with a different generator model.

This complements the language model by retrieving contextually relevant documents, making the generated content richer:

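```python
from langchain.retrievers import ArceeRetriever

# Create a retriever backed by the DALM-PubMed model
retriever = ArceeRetriever(model="DALM-PubMed", arcee_api_key="ARCEE-API-KEY")

# Fetch up to 5 relevant documents for the query
documents = retriever.get_relevant_documents(query="Neurobiology", size=5)

print(documents)
```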

Here's an example of using Arcee's PubMed retriever with OpenAI's GPT-3.5 Turbo:

```python
from langchain.retrievers import ArceeRetriever
from langchain.chat_models import ChatOpenAI
from langchain.chains import ConversationalRetrievalChain
from langchain_core.messages import HumanMessage, SystemMessage

# Create an instance of the ArceeRetriever class
# (the API key is read from the ARCEE_API_KEY environment variable)
retriever = ArceeRetriever(model="DALM-PubMed")

# Create an instance of the ChatOpenAI model
openai_model = ChatOpenAI(model_name="gpt-3.5-turbo", openai_api_key="your-OpenAI-key")

# Create the ConversationalRetrievalChain
# (a built-in alternative; custom_chain below does not use it)
chain = ConversationalRetrievalChain.from_llm(openai_model, retriever=retriever)


def custom_chain(question):
    # Retrieve relevant documents using ArceeRetriever
    documents = retriever.get_relevant_documents(question)

    # Extract relevant context from the retrieved documents
    context = "\n".join([doc.page_content for doc in documents])

    # Pass the context as a system message and the question as a human message
    messages = [SystemMessage(content=context), HumanMessage(content=question)]

    # Generate an answer using the OpenAI chat model
    response = openai_model.invoke(messages)
    return response.content


# Use the custom chain to ask questions and get answers
question = "Can AI-driven music therapy contribute to the rehabilitation of patients with disorders of consciousness?"
answer = custom_chain(question)

print(f"Question: {question}")
print(f"Answer: {answer}")
```

Using Arcee for Generation

Arcee's generate endpoint bundles retrieval and generation into a single call.

```python
from langchain.llms import Arcee

# Create an instance of the Arcee class
arcee = Arcee(
    model="DALM-PubMed",
    arcee_api_key="ARCEE-API-KEY",  # omit if ARCEE_API_KEY is already set in the environment
)
```
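Conceptually, a bundled generate call retrieves context and then generates from it in one step. Here's a toy, self-contained sketch of that retrieve-then-generate flow. It is not Arcee's implementation: keyword_retrieve is a hypothetical keyword-overlap retriever standing in for Arcee's trained retriever, bundled_generate is a hypothetical name, and llm is any callable that turns a prompt into text. The size argument plays the same role as Arcee's size parameter, capping how many documents are retrieved.

```python
from typing import Callable, List


def keyword_retrieve(query: str, corpus: List[str], size: int) -> List[str]:
    """Toy retriever: rank documents by how many query words they contain."""
    query_terms = set(query.lower().split())
    scored = [(len(query_terms & set(doc.lower().split())), doc) for doc in corpus]
    # Highest overlap first (stable for ties); drop documents sharing no terms
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored if score > 0][:size]


def bundled_generate(query: str, corpus: List[str], llm: Callable[[str], str], size: int = 3) -> str:
    """Retrieve context, then generate from it, in a single call."""
    context = "\n".join(keyword_retrieve(query, corpus, size))
    prompt = f"Context:\n{context}\n\nQuestion: {query}"
    return llm(prompt)


# Placeholder corpus and a stand-in "model" that just echoes its prompt
corpus = ["notes on scopolamine dosage", "notes on music therapy outcomes", "unrelated notes"]
print(bundled_generate("music therapy", corpus, llm=lambda prompt: prompt, size=1))
```

Keeping retrieval behind the same call means the caller only tunes parameters such as size, rather than wiring a retriever by hand.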

By integrating Arcee with Langchain, you can generate text with greater contextual depth, tailored to domain-specific requirements, and adjust parameters such as 'field_name', 'filter_type', and 'value' to your specific needs.

These adjustments let you tailor your output for greater precision and flexibility:

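```python
prompt = "Explore the role of AI in neuroscience."

# invoke() takes a single prompt string; size is passed through
# as a generation keyword argument
response = arcee.invoke(prompt, size=10)

print(response)
```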

Advanced configurations for Generation and Retrieval

With Arcee, you can customize settings such as API URLs and model arguments. Here's how to declare a model for generation using model_kwargs for greater control:

```python
from langchain.llms import Arcee

arcee = Arcee(
    model="DALM-Patent",
    model_kwargs={
        "size": 5,  # number of results returned
        "filters": [
            {"field_name": "document", "filter_type": "fuzzy_search", "value": "innovation"}
        ],
    },
)
```
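You may want to sanity-check model_kwargs locally before creating the model. This is a minimal sketch, assuming size should be a non-negative integer and every filter needs exactly the field_name, filter_type, and value keys used above, with fuzzy_search or strict_search (the two filter types this post describes) as its type. check_model_kwargs is hypothetical, and the service may apply different rules.

```python
from typing import Any, Dict, List

FILTER_KEYS = {"field_name", "filter_type", "value"}
# The two filter types this post describes
FILTER_TYPES = {"fuzzy_search", "strict_search"}


def check_model_kwargs(model_kwargs: Dict[str, Any]) -> List[str]:
    """Return human-readable problems found in model_kwargs (empty if none)."""
    problems = []
    size = model_kwargs.get("size")
    if size is not None and (not isinstance(size, int) or size < 0):
        problems.append("size must be a non-negative integer")
    for i, flt in enumerate(model_kwargs.get("filters", [])):
        if not isinstance(flt, dict) or set(flt) != FILTER_KEYS:
            problems.append(f"filter {i} must have exactly the keys {sorted(FILTER_KEYS)}")
        elif flt["filter_type"] not in FILTER_TYPES:
            problems.append(f"filter {i} has unknown filter_type {flt['filter_type']!r}")
    return problems


# The configuration from the example above passes the check
print(check_model_kwargs({
    "size": 5,
    "filters": [{"field_name": "document", "filter_type": "fuzzy_search", "value": "innovation"}],
}))  # prints []
```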

If you're using the arcee.generate endpoint, there's no need to customize your retrievers. The generator endpoint abstracts the retrieval as well!

Size refers to the number of results returned.

The parameters in this code can be adjusted according to your domain-specific needs.

We're adding support to pass the 'field_name', 'filter_type', and 'value'. Here's a glimpse of upcoming filters.

- field_name: Determines the specific document field on which to apply the search filter.

- filter_type:
  - fuzzy_search: Enables a flexible search, allowing for variations within the target field to match related terms.
  - strict_search: Enables a precise search, requiring the exact term to be present within the field, though not necessarily as an exact phrase match.

- value: Represents the actual term or phrase to search for within the input data or metadata.
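To make the difference between the two filter types concrete, here's a local approximation over a metadata dictionary. It only illustrates the descriptions above and is not Arcee's server-side matching: strict_search is modeled as every term appearing exactly (case-insensitive) somewhere in the field, and fuzzy_search as every term having a close match according to Python's difflib. matches_filter and the 0.8 cutoff are hypothetical choices.

```python
import difflib
import re
from typing import Dict


def matches_filter(doc: Dict[str, str], flt: Dict[str, str], cutoff: float = 0.8) -> bool:
    """Locally approximate whether doc[field_name] satisfies one filter (hypothetical)."""
    words = re.findall(r"\w+", doc.get(flt["field_name"], "").lower())
    terms = re.findall(r"\w+", flt["value"].lower())
    if flt["filter_type"] == "strict_search":
        # Every term must appear exactly, but not necessarily as a contiguous phrase
        return all(term in words for term in terms)
    if flt["filter_type"] == "fuzzy_search":
        # Every term only needs a close match, so variants like plurals still count
        return all(difflib.get_close_matches(term, words, n=1, cutoff=cutoff) for term in terms)
    raise ValueError(f"unknown filter_type: {flt['filter_type']!r}")


doc = {"document": "Patent innovations in battery chemistry"}
print(matches_filter(doc, {"field_name": "document", "filter_type": "strict_search", "value": "innovation"}))  # prints False
print(matches_filter(doc, {"field_name": "document", "filter_type": "fuzzy_search", "value": "innovation"}))  # prints True
```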

Here are some ideas for building your first DALM with Arcee and Langchain!

- Contextual Chatbots: By combining document retrieval with text generation, chatbots can deliver answers with higher relevance and depth.

- Semantic Search Engines: ArceeRetriever can retrieve relevant documents for any given query, which can be utilized to build search engines with greater contextual awareness and semantic understanding.

- Knowledge Bases: Combining Arcee and ArceeRetriever, organizations can establish knowledge bases that are easier to explore and richer in information.

What's next

Combining domain knowledge with Langchain gives you more flexibility when building LLM applications. Orchestrate your models, chain them together, and build agentic apps that perform domain-specific tasks.

Build your first DALM today!