Introducing Arcee-VyLinh - A Powerful 3B Parameter Vietnamese Language SLM

Today, Arcee AI makes its latest contribution to AI in underserved global languages with the release of a 3B Vietnamese SLM, Arcee-VyLinh.

Xin Chao Vietnam! (Hello, Vietnam!)

In the rapidly evolving landscape of AI, Vietnamese language processing has lagged behind its global counterparts. While large language models (LLMs) have transformed how we interact with AI in English and several other languages, Vietnamese speakers have had to settle for either generic multilingual models or specialized models that compromise on performance.

Today, we're changing that narrative with Arcee-VyLinh, a breakthrough 3B parameter small language model (SLM) that redefines what's possible with Vietnamese language AI. Despite its remarkably compact size, VyLinh demonstrates capabilities that surpass significantly larger models, marking a new chapter in Vietnamese NLP.

Our journey began with a simple yet ambitious goal: to create a Vietnamese language model that could deliver state-of-the-art performance without requiring massive computational resources. We believed that with the right training approach, we could push the boundaries of what's possible with a 3B parameter model.

Use Cases

Before we dive into how we trained Arcee-VyLinh, we'd like to highlight some of the many practical use cases of the model. The advanced Vietnamese language capabilities make it versatile for both enterprise and personal applications.

In business settings, it excels at customer service automation, content creation, and document processing. Educational institutions can leverage it for language learning and academic writing support.

For developers and researchers, it serves as a powerful tool for building Vietnamese-language applications, from chatbots to content moderation systems.

Finally, the quantized version brings these capabilities to personal users, enabling local deployment for translation assistance, writing support, and creative projects, all while maintaining high performance on consumer hardware.

Training Details

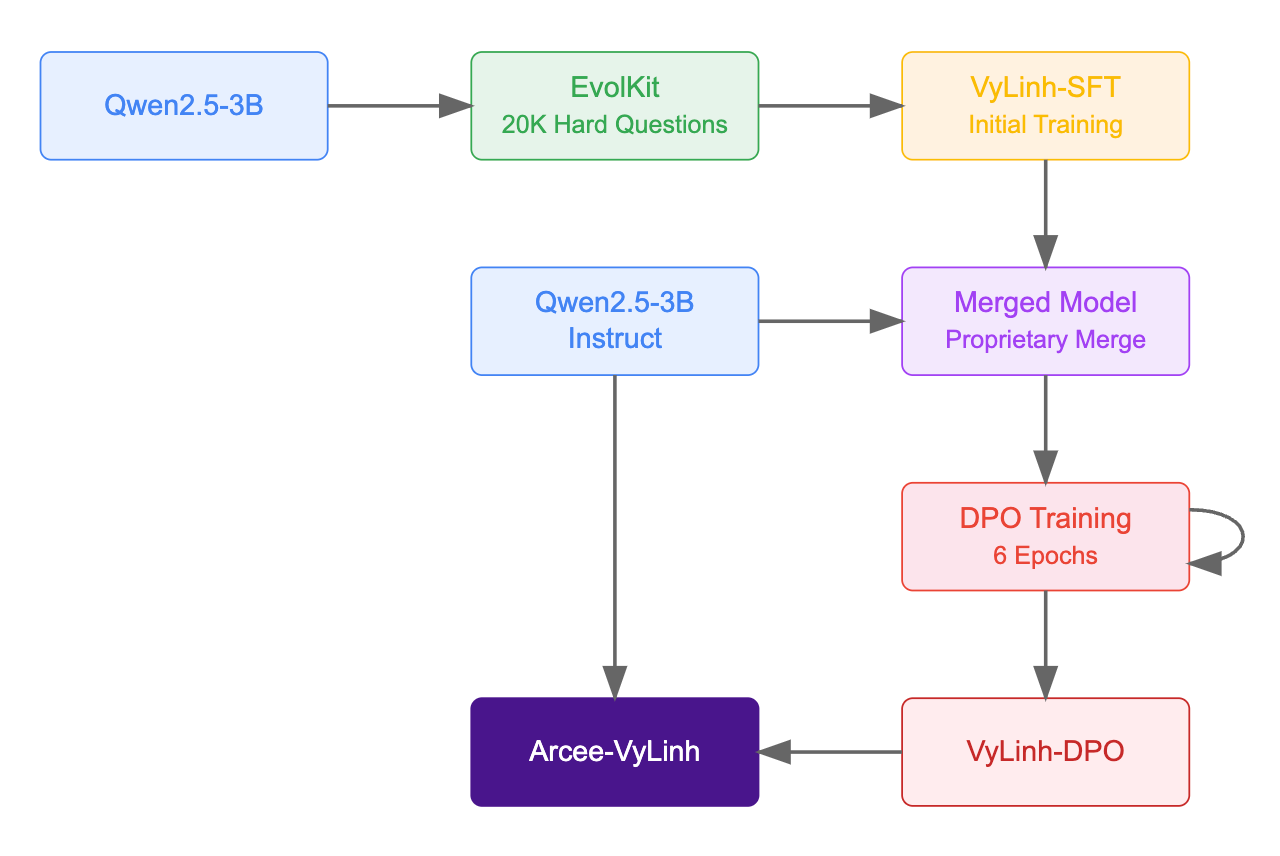

Arcee-VyLinh's development followed a multi-stage training process designed to maximize Vietnamese language capabilities while maintaining efficiency with only 3B parameters.

Foundation and Evolution

We started with Qwen2.5-3B as our base model, chosen for its strong multilingual capabilities and efficient architecture. Using our EvolKit, we generated 20,000 challenging questions specifically designed to test and improve Vietnamese language understanding. These questions were answered by Qwen2.5-72B-Instruct to create a high-quality training dataset, ensuring that our smaller model learns from the best possible examples.
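The question-and-answer pairing described above can be sketched roughly as follows. This is a minimal illustration, not the EvolKit pipeline itself: `build_sft_records`, `fake_teacher`, and the chat-style record layout are all hypothetical names and choices, and the stand-in teacher simply echoes the question where a real run would call the larger teacher model.

```python
from typing import Callable, Dict, List


def build_sft_records(
    questions: List[str],
    teacher_answer: Callable[[str], str],
) -> List[Dict[str, list]]:
    """Pair each evolved question with an answer from a teacher model.

    `teacher_answer` is a hypothetical callable standing in for a call
    to the larger teacher model; it is not part of any real library.
    """
    records = []
    for question in questions:
        answer = teacher_answer(question).strip()
        if not answer:
            continue  # drop questions the teacher failed to answer
        records.append(
            {
                "messages": [
                    {"role": "user", "content": question},
                    {"role": "assistant", "content": answer},
                ]
            }
        )
    return records


def fake_teacher(question: str) -> str:
    """Stand-in teacher used only so the sketch runs offline."""
    return f"(teacher answer to: {question})"


records = build_sft_records(["Thủ đô của Việt Nam là gì?"], fake_teacher)
```

Each record keeps the question and the teacher's answer together in one conversation, a layout that supervised fine-tuning tools commonly accept.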

Initial Training and Model Merging

The evolved dataset was used to train VyLinh-SFT (Supervised Fine-Tuning), creating our initial Vietnamese language model. We then employed our proprietary merging technique to combine VyLinh-SFT with Qwen2.5-3B-Instruct, which enhanced the instruction-following capabilities while preserving Vietnamese language understanding.
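The merging technique used here is proprietary and is not described in this post. Purely to illustrate the general idea of combining two checkpoints that share an architecture, the sketch below uses plain linear interpolation of weights, which is a different and much simpler method. The `linear_merge` function, the `alpha` parameter, and the toy weight dictionaries are assumptions made for the example.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def linear_merge(a: Weights, b: Weights, alpha: float = 0.5) -> Weights:
    """Interpolate two weight sets: alpha * a + (1 - alpha) * b.

    A generic illustration only; NOT the merging technique used
    to build Arcee-VyLinh.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if a.keys() != b.keys():
        raise ValueError("both models must have the same parameter names")
    merged: Weights = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            alpha * x + (1.0 - alpha) * y for x, y in zip(a[name], b[name])
        ]
    return merged


# Toy "checkpoints" with a single two-element parameter each.
sft_weights = {"layer.weight": [1.0, 2.0]}
instruct_weights = {"layer.weight": [3.0, 4.0]}
merged = linear_merge(sft_weights, instruct_weights, alpha=0.5)
```

With `alpha=0.5` each merged value is the midpoint of the two inputs. Moving `alpha` toward 1 favors the first model and moving it toward 0 favors the second.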

DPO Training and Final Refinement

The merged model underwent Direct Preference Optimization (DPO) training using ORPO-Mix-40K, translated to Vietnamese. What makes our approach unique is the iterative nature of this phase:

- We ran DPO for 6 epochs

- Each epoch produced an improved model

- The improvements were iteratively reinforced through our self-improvement loop

The resulting VyLinh-DPO was then merged one final time with Qwen2.5-3B-Instruct, producing Arcee-VyLinh, a model that combines robust Vietnamese language capabilities with strong instruction-following abilities.
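For readers unfamiliar with DPO, the per-example objective can be sketched as below. It is the standard DPO loss for one preference pair, computed from summed log-probabilities of the chosen and rejected responses under the policy being trained and under a frozen reference model. The function name, the argument names, and `beta=0.1` are assumptions for illustration; the hyperparameters used for VyLinh-DPO are not stated in this post.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value, not taken from the post
) -> float:
    """Standard DPO loss for a single (chosen, rejected) pair."""
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # loss = -log(sigmoid(logits)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# The loss falls below log(2) once the policy favors the chosen response
# more strongly than the reference model does.
neutral = dpo_loss(0.0, 0.0, 0.0, 0.0)
improved = dpo_loss(-1.0, -5.0, -2.0, -2.0)
```

A pair on which policy and reference agree exactly gives a loss of log(2). Training pushes the loss lower by raising the relative likelihood of the preferred response.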

Benchmarks

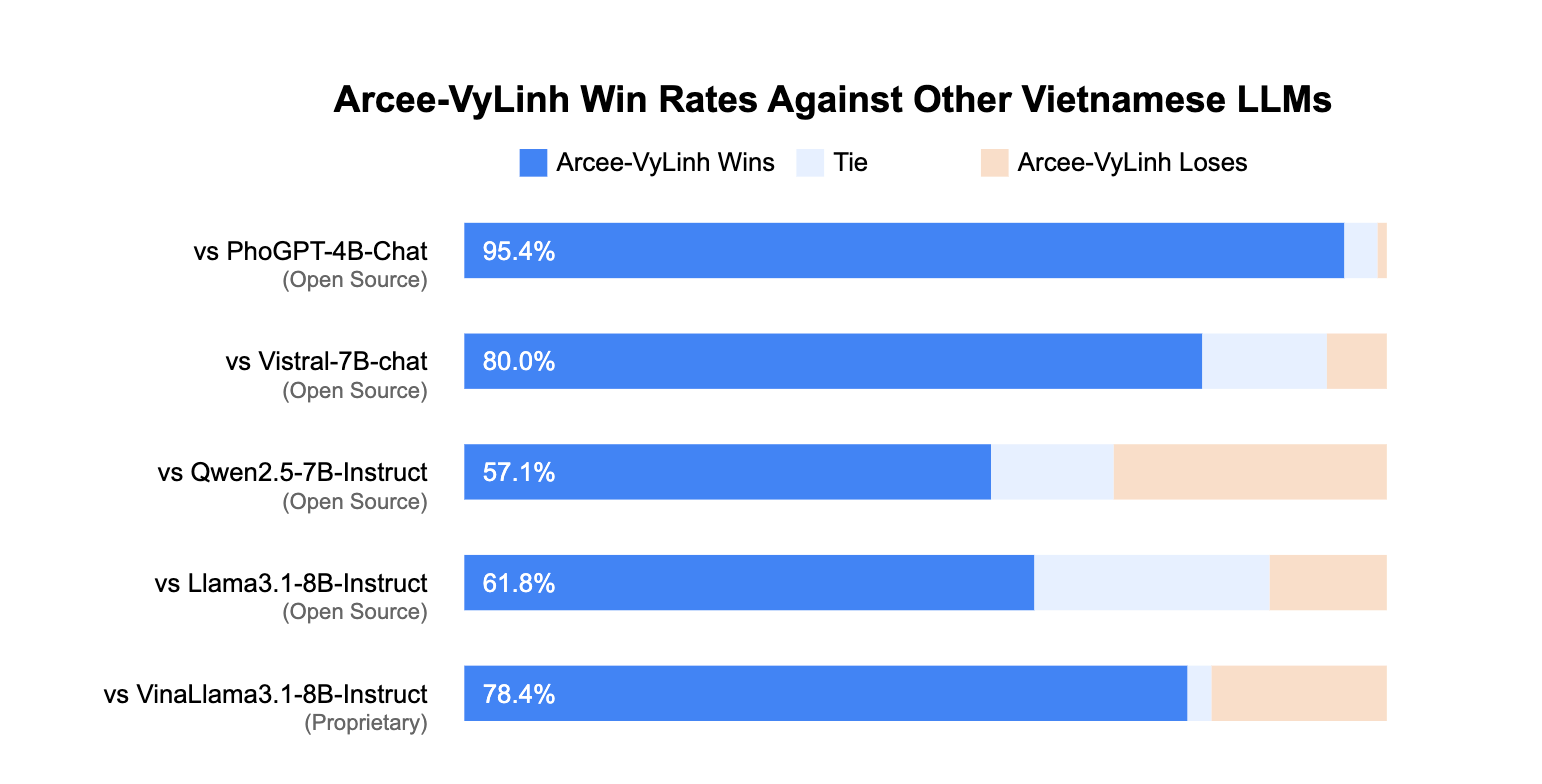

To evaluate Arcee-VyLinh's capabilities, we conducted comprehensive testing using the Vietnamese subset of m-ArenaHard, provided by CohereForAI. This benchmark is particularly challenging as it contains complex questions specifically designed to test the limits of language models.

Our evaluation methodology was straightforward but rigorous: models were paired to answer the same questions, with Claude 3.5 Sonnet serving as an impartial judge to determine the winner of each comparison. The results were remarkable.
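As a rough sketch of how pairwise verdicts turn into a win rate, the snippet below counts the share of comparisons that a given model won. The verdict labels (`"A"`, `"B"`, `"tie"`), the `win_rate` helper, and the choice to count ties as non-wins are assumptions for illustration; how ties were handled in the evaluation is not stated here, and the sample verdicts are made up.

```python
from collections import Counter
from typing import Iterable


def win_rate(verdicts: Iterable[str], model: str = "A") -> float:
    """Fraction of pairwise comparisons won by `model`.

    Ties stay in the denominator and count as non-wins (an assumption).
    """
    counts = Counter(verdicts)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no verdicts to score")
    return counts[model] / total


# Made-up verdicts from a judge comparing model A against model B.
sample_verdicts = ["A", "A", "B", "A", "tie"]
rate = win_rate(sample_verdicts, model="A")
```

Here model A wins three of five comparisons, so `rate` is 0.6.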

Performance Analysis

Arcee-VyLinh demonstrated exceptional capabilities against both open-source and proprietary models:

- Achieved a 95.4% win rate against PhoGPT-4B-Chat

- Dominated with an 80% win rate against Vistral-7B-chat

- Secured a strong 57.1% win rate against Qwen2.5-7B-Instruct

- Maintained a solid 61.8% win rate against Llama3.1-8B-Instruct

- Performed impressively with a 78.4% win rate against VinaLlama3.1-8B-Instruct

What makes these results particularly noteworthy is that Arcee-VyLinh achieves these win rates with just 3B parameters, significantly fewer than its competitors, which range from 4B to 8B parameters. This demonstrates the effectiveness of our training methodology, particularly the combination of evolved hard questions and iterative DPO training.

Key Insights

The benchmark results reveal several important points:

- Our innovative training approach allows a 3B parameter model to outperform larger models consistently.

- The quality of training data and methodology can significantly outweigh parameter count.

- The combination of EvolKit-generated questions and iterative DPO training creates a remarkably strong model.

Try Arcee-VyLinh Today

We're excited to make Arcee-VyLinh freely available to the Vietnamese AI community. Our model represents a significant step forward in Vietnamese language processing, proving that efficient, powerful language models are possible with thoughtful architecture and training approaches.

Download and Usage

You can access Arcee-VyLinh in two versions:

- Full Model

  - Available at: arcee-ai/Arcee-VyLinh

  - Recommended for enterprise and research applications

  - Full 3B parameter implementation

  - Optimal for scenarios requiring maximum accuracy

- Quantized Version

  - Available at: arcee-ai/Arcee-VyLinh-GGUF

  - Optimized for deployment on consumer hardware

  - Maintains strong performance while reducing resource requirements

  - Perfect for local deployment and personal use
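One possible way to try the full model is through the Hugging Face `transformers` library, sketched below. This assumes the `arcee-ai/Arcee-VyLinh` repository loads through the standard Auto classes and ships a chat template; neither is confirmed in this post. The helper names `build_messages` and `generate_reply` are hypothetical, and the first call downloads the model weights.

```python
from typing import Dict, List

MODEL_ID = "arcee-ai/Arcee-VyLinh"  # repository name listed above


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a single user question in chat-message format."""
    return [{"role": "user", "content": question}]


def generate_reply(question: str, max_new_tokens: int = 256) -> str:
    """Generate one reply; assumes the repo provides a chat template."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (downloads the weights on first use):
# print(generate_reply("Xin chào! Bạn có thể giới thiệu về Hà Nội không?"))
```

The quantized GGUF build is meant for GGUF-compatible local runtimes rather than this loading path.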

We believe in the power of community-driven development and welcome your feedback and contributions. Try out the model, share your experiences, and help us continue improving AI in Vietnamese and other underserved global languages.

Learn More About Arcee AI

Arcee AI is a pioneer in the development of Small Language Models (SLMs) for businesses around the globe, and we are proud to contribute to the advancement of non-English AI models. To learn more about our off-the-shelf and custom SLMs, reach out to our team today!