The Power of Non-English LLMs: Meet our Arabic model, Arcee-Meraj (معراج)

We've taken our groundbreaking general-purpose model, Arcee Nova, and enhanced it for Arabic – leading to an Arabic-language LLM that's enterprise-ready, with unprecedented text-generation and comprehension capabilities.

Hot on the heels of our recent releases, today marks another landmark for Arcee AI as we unveil Arcee-Meraj — a highly refined iteration of our previous model, Arcee Nova, specifically fine-tuned for Arabic language applications. Arcee-Meraj is evaluated using the robust benchmarks of the Open Arabic LLM Leaderboard (OALL), where it not only excels but also sets new standards for Arabic language models.

Arabic is more than just a language; it connects more than 400 million people across 20+ countries, from the vibrant streets of Cairo to the historic corners of Baghdad. Across its many dialects, such as Egyptian, Levantine, Maghrebi, and Gulf, each region brings its own flavor to the language. Arcee-Meraj aims to bridge gaps and open up new opportunities, showing the world the incredible potential of language-specific large language models. This release isn't just about technology; it's about embracing every culture and making sure no one is left behind in the digital age.

Background

Arcee-Meraj is the Arabic-enhanced version of Arcee Nova, a groundbreaking general-purpose model that approached the performance of the May 2023 version of GPT-4. By further fine-tuning Arcee Nova specifically for the nuances of Arabic, Arcee-Meraj offers unprecedented capabilities in understanding and generating Arabic text at an enterprise level.

Performance

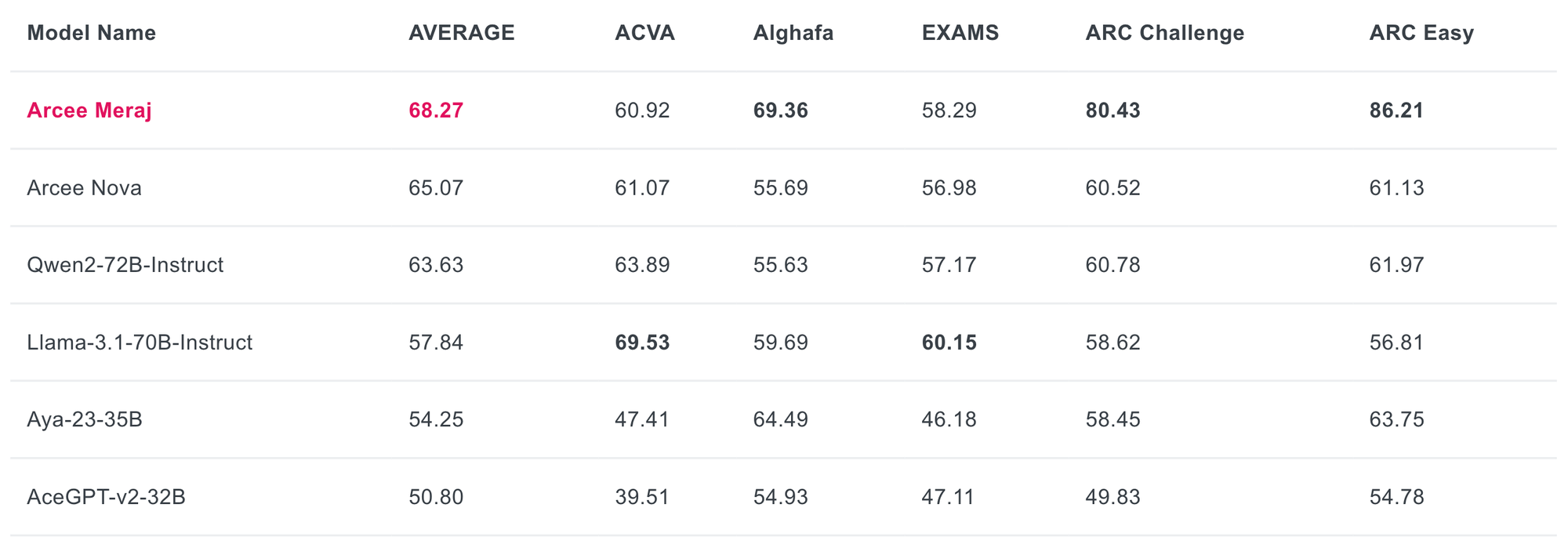

- Evaluated on the Open Arabic LLM Leaderboard (OALL) benchmarks

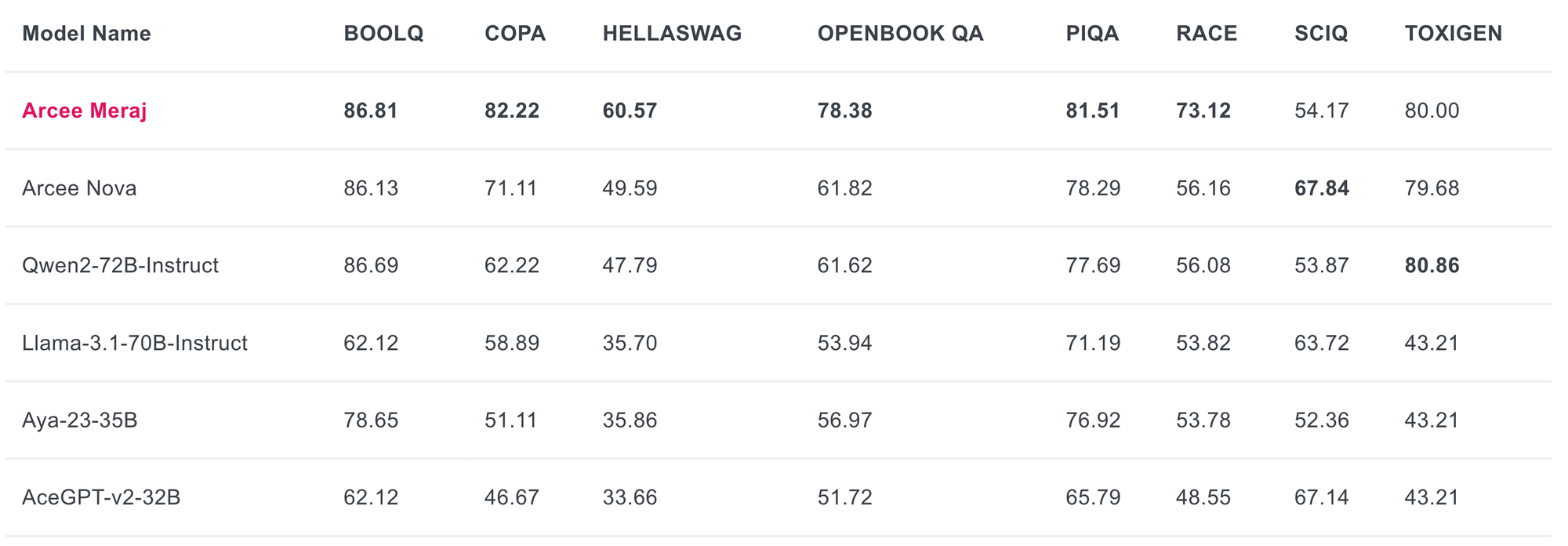

- Evaluated on general English benchmarks

- Leading enterprise model in the field

- Surpassing both state-of-the-art multilingual and Arabic-specific LLMs.

Advancing the development of LLMs in Arabic and other non-English languages is a top goal for Arcee AI, not just for the cultural and academic value of these tools, but also for their numerous business applications, including diverse Customer Support, Content Creation, Coding, and Education use cases.

Technical Details

- Base Model: Arcee Nova, known for its integration of Qwen2-72B-Instruct with a custom generalist dataset, further enhanced with Reinforcement Learning from Human Feedback (RLHF).

- Dataset Selection: Our approach involves meticulously collecting a mixture of high-quality datasets in both English and Arabic, ensuring a broad and deep linguistic representation that enhances the model's understanding and generative capabilities.

- Batch Translation: To facilitate the seamless integration of English data into our Arabic model, we employ a cost-effective batch translation process that ensures rapid and accurate translation, preserving the integrity and subtlety of the original datasets.

- Task-Specific Cleaning: We apply targeted cleaning protocols for post-training tasks such as Direct Preference Optimization (DPO), enhancing the cleanliness and compatibility of the data for specific tasks.

- Self-curation: We applied a self-curation method to select high-quality examples from open-source datasets. Our curation criteria focused on educational-value judgments generated by our base model, Arcee Nova.

- Iterative Training and Post-Training: Arcee-Meraj undergoes multiple rounds of training and post-training, structured to verify that the model performs effectively across a variety of applications while maintaining high standards of accuracy and reliability.

- Model Merging: The final stage involves merging highly performant checkpoints from various training phases. This strategic merging process balances the model's performance across diverse Arabic benchmarks, ensuring robustness and adaptability in real-world applications.
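The post does not say which merging method was used. As a minimal sketch, assuming plain linear (weighted) averaging of parameters across checkpoints that share one architecture, the merging step can be illustrated as below. The function name `linear_merge`, the toy dict-of-lists checkpoint format, and the example weights are hypothetical; a real merge of a 72B model would operate on tensors and might use other methods entirely (for example SLERP or TIES).

```python
from typing import Dict, List, Sequence

# Hypothetical toy checkpoint format: parameter name -> flat list of floats.
# Real checkpoints hold tensors; this only illustrates the arithmetic.
Checkpoint = Dict[str, List[float]]


def linear_merge(checkpoints: Sequence[Checkpoint], weights: Sequence[float]) -> Checkpoint:
    """Weighted average of every parameter across checkpoints (a simple linear merge)."""
    if not checkpoints or len(checkpoints) != len(weights):
        raise ValueError("need at least one checkpoint and exactly one weight per checkpoint")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize so the merged parameters stay on the same scale as the inputs.
    norm = [w / total for w in weights]

    merged: Checkpoint = {}
    for name, first in checkpoints[0].items():
        # All checkpoints must share parameter names and shapes (same architecture).
        for ckpt in checkpoints:
            if name not in ckpt or len(ckpt[name]) != len(first):
                raise ValueError(f"parameter {name!r} is missing or has a different shape")
        merged[name] = [
            sum(w * ckpt[name][i] for w, ckpt in zip(norm, checkpoints))
            for i in range(len(first))
        ]
    return merged


if __name__ == "__main__":
    ckpt_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
    ckpt_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
    print(linear_merge([ckpt_a, ckpt_b], weights=[0.5, 0.5]))
    # {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

Under this assumption, "balancing performance across benchmarks" would amount to choosing which checkpoints to include and how to weight them, for instance by comparing candidate merges on held-out evaluations.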

Performance Metrics

Open Arabic LLM (OALL) Benchmarks:

Arcee-Meraj outperforms state-of-the-art models on most OALL benchmarks, showing marked improvements and achieving the top average score.

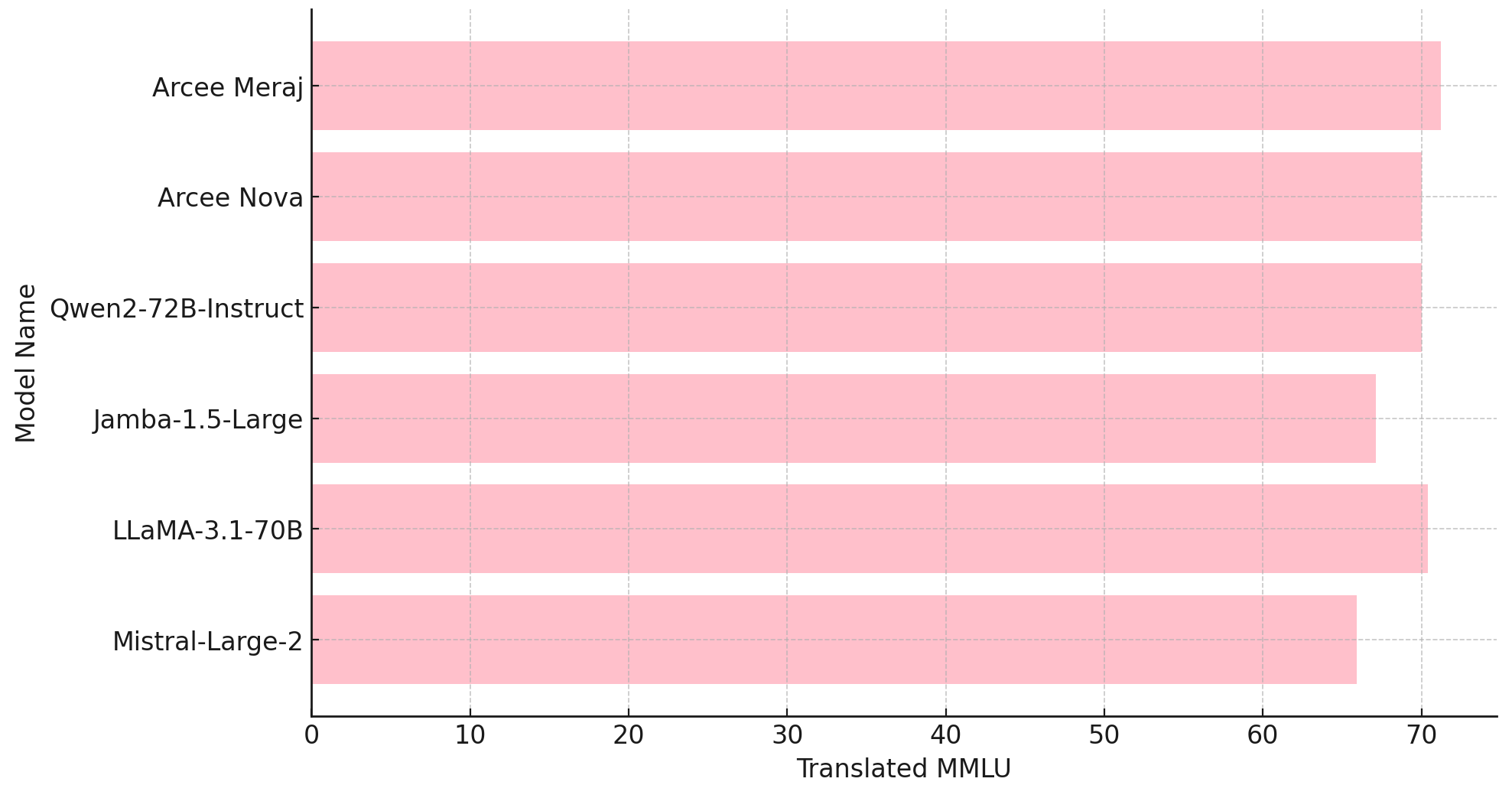

Translated MMLU:

We focused on the multilingual MMLU dataset, as distributed through the LM Evaluation Harness repository, to directly compare Arcee-Meraj with Jamba-1.5-Large, since this is the key metric the Jamba-1.5 paper highlights to showcase multilingual strength. Arcee-Meraj outperformed not only Jamba-1.5-Large but also LLaMA-3.1-70B and Mistral-Large-2, setting new standards for Arabic language capabilities. These results demonstrate Arcee-Meraj's superior performance, proving it excels where it truly matters.

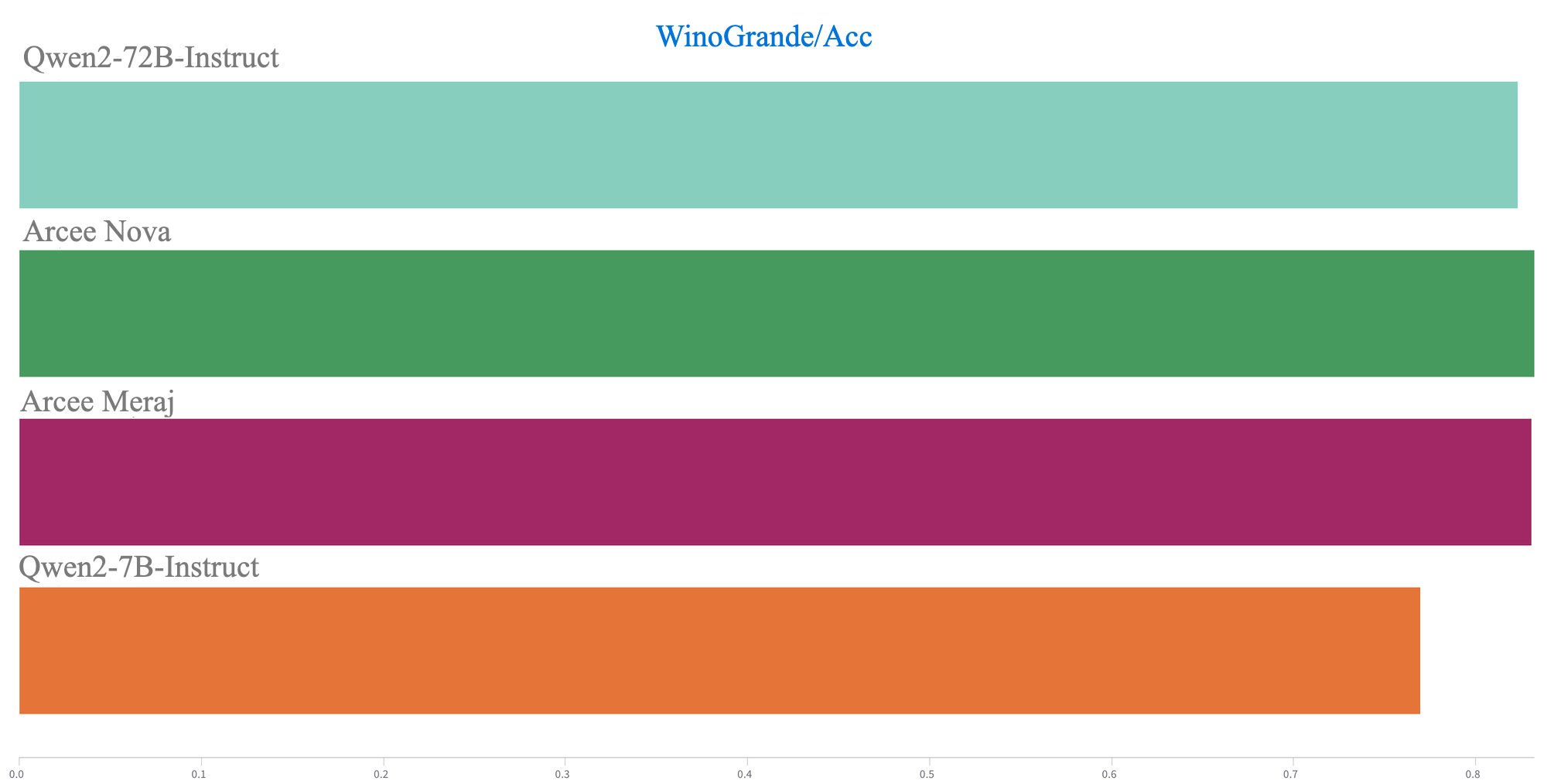

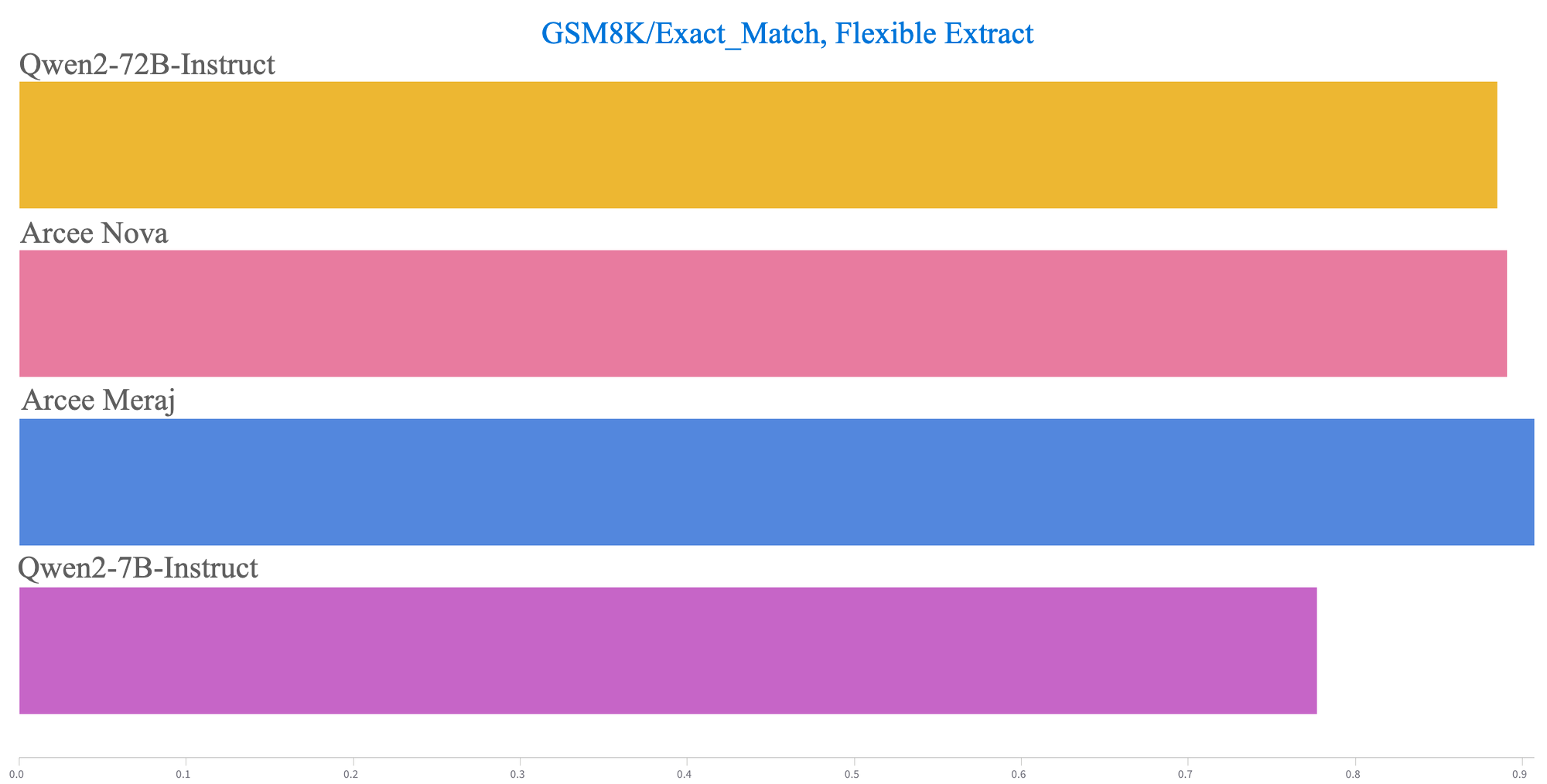

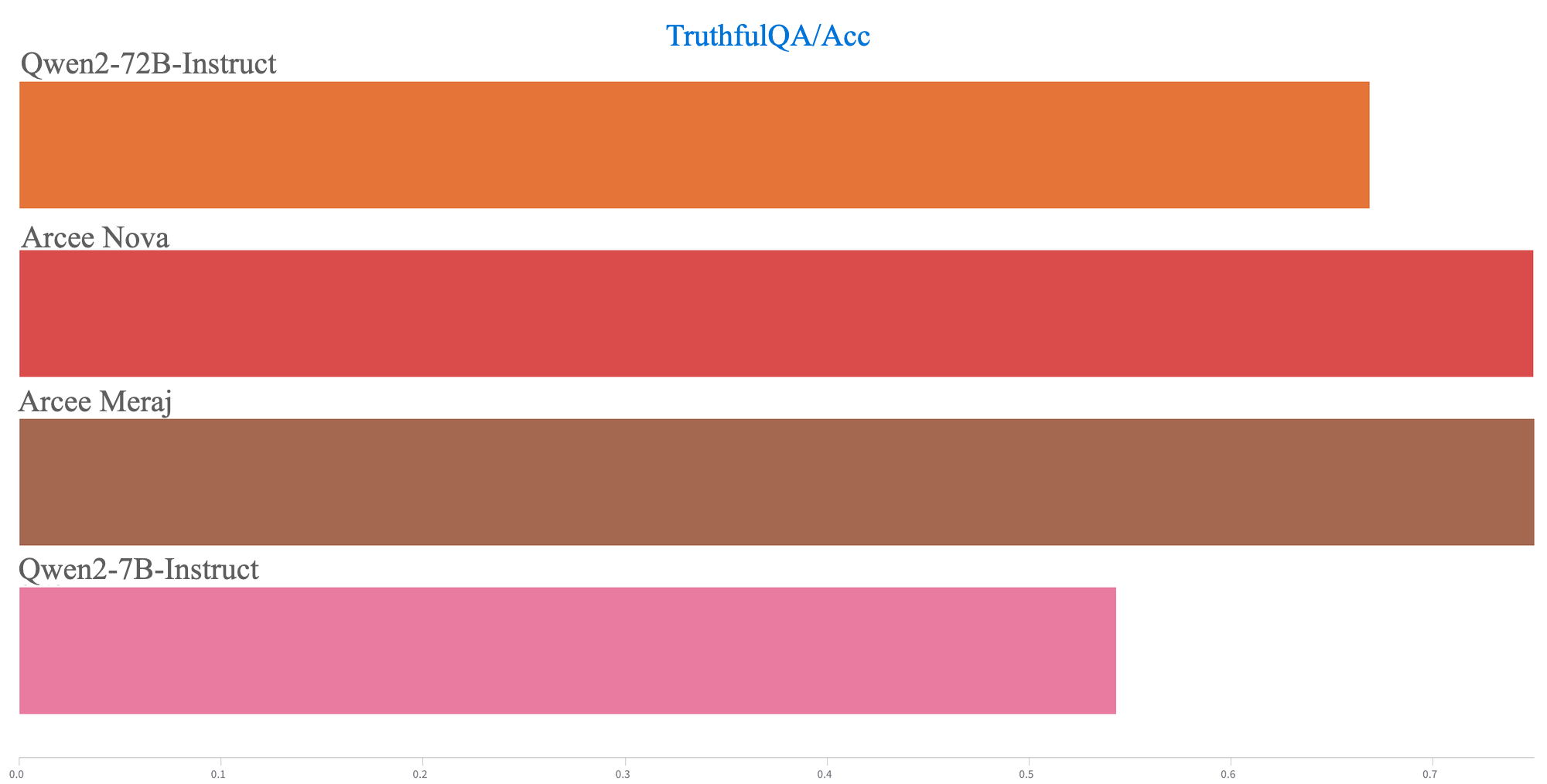

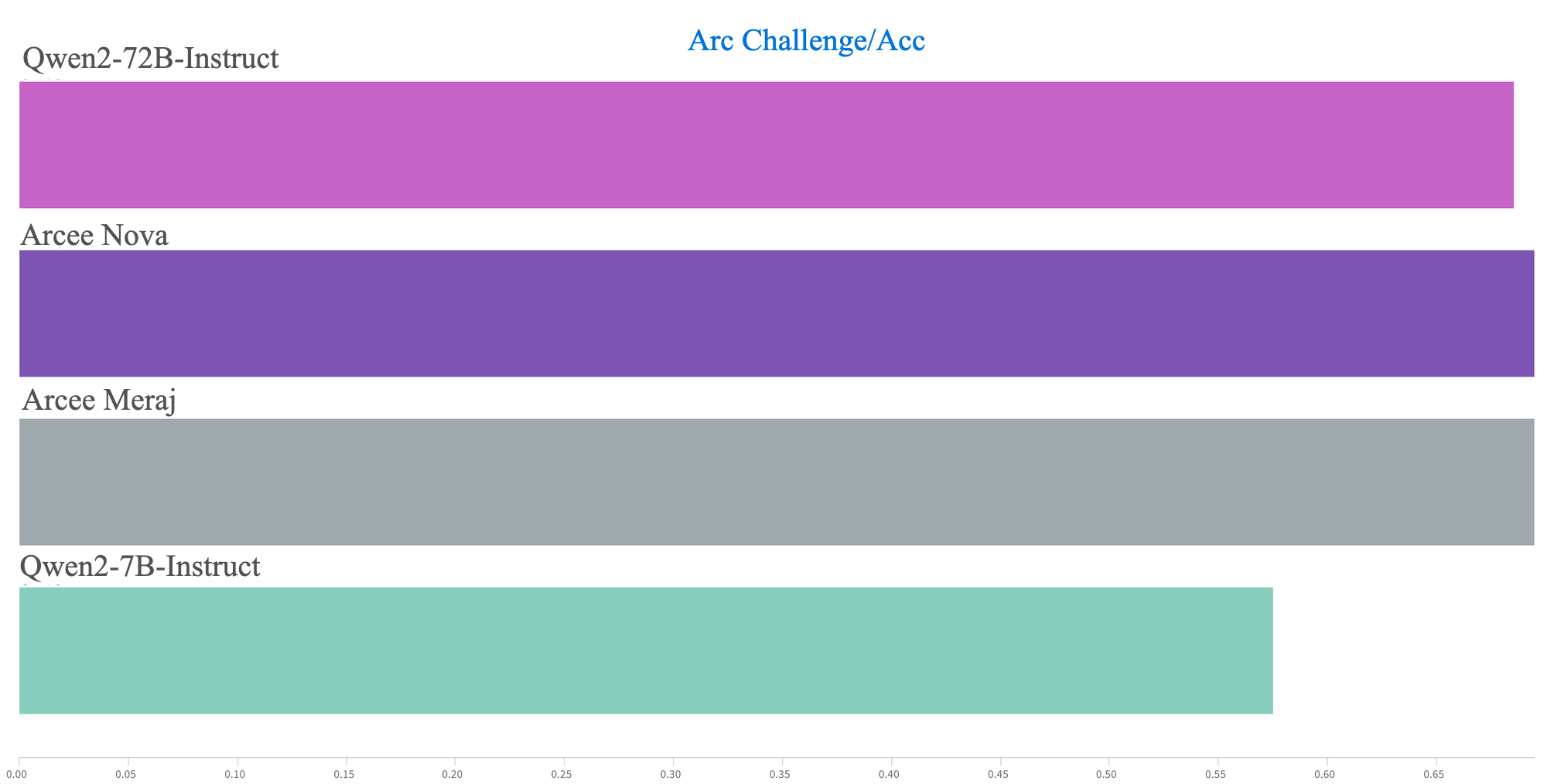

English Benchmarks:

Arcee-Meraj delivers outstanding bilingual performance in both Arabic and English. In further testing on English benchmarks such as WinoGrande, GSM8K, TruthfulQA, and ARC Challenge, it demonstrated strong skills in reasoning, problem-solving, and knowledge understanding. These results show that Arcee-Meraj maintained its English language capabilities relative to its base models, redefining what's possible in bilingual AI.

Key Capabilities

- Arabic Language Understanding: Strong capabilities in general language understanding, reading comprehension, and common-sense reasoning in Arabic.

- Cultural Adaptation: Content creation that is not only linguistically accurate but also culturally sensitive, ensuring that the material resonates with and respects Arabic cultural norms and values.

- Education: Personalized and adaptive learning solutions tailored to Arabic speakers, providing high-quality educational content generation to enhance learning experiences in various subjects.

- Mathematics and Coding: Support for mathematical reasoning and problem-solving, alongside code generation capabilities. This offers valuable resources for developers and technical professionals working with or in Arabic.

- Customer Service: Development of sophisticated Arabic-speaking chatbots and virtual assistants designed to handle customer inquiries and provide support with a high level of accuracy and natural language understanding.

- Content Creation: Generation of high-quality Arabic content tailored for various purposes, including marketing materials, technical documentation, creative writing, and more, ensuring effective communication and engagement in the Arabic language.

Demo

Here is the link to the Chat Interface: https://meraj.arcee.ai/

Landing Page with Sample Prompts

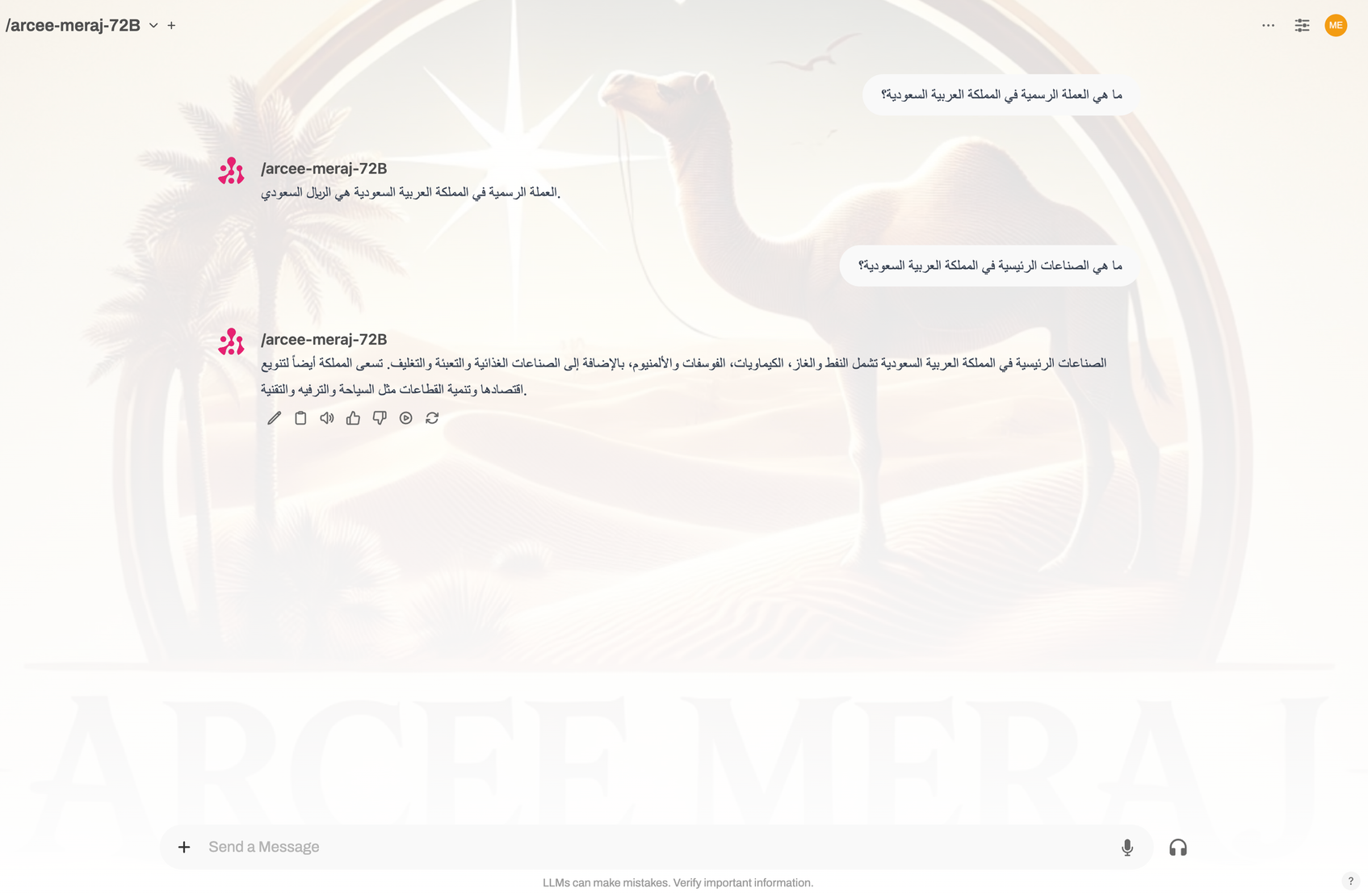

Economics

English Translation:

User: What is the official currency in Saudi Arabia?

Assistant: The official currency of the Kingdom of Saudi Arabia is the Saudi Riyal.

User: What are the main industries in Saudi Arabia?

Assistant: Saudi Arabia's main industries include oil and gas, chemicals, phosphates and aluminum, as well as food and packaging. The Kingdom is also seeking to diversify its economy and develop sectors such as tourism, entertainment and technology.

History

English Translation:

User: How many Rightly Guided Caliphs were there? Answer the previous question.

Assistant: The number of Rightly Guided Caliphs is four.

User: What are their names?

Assistant: The names of the Rightly Guided Caliphs are: Abu Bakr Al-Siddiq, Omar bin Al-Khattab, Othman bin Affan, and Ali bin Abi Talib.

User: What are the criteria on the basis of which the Rightly Guided Caliphs were chosen?

Assistant: The Rightly-Guided Caliphs were chosen based on several criteria, including: high moral values, justice, wisdom, knowledge, and strong faith. Their selection was often based on the recommendation of the previous Caliph.

Creative Writing

English Translation:

User: Tell a magical story about fairy tales.

Assistant: In a faraway land called Dreamland, there was a huge castle that glowed at night like the most beautiful moon. The castle was inhabited by a princess named Nora. But she was no ordinary princess, she had a special power: the ability to turn written words into real things.

One day, an evil wizard arrived and kidnapped the castle, leaving the princess in darkness. But Nora did not lose hope. She used her powers and wrote a story about a brave hero who came to save her. And indeed, the hero appeared. He was a good young man, but he needed guidance. So Nora wrote the hero's journey and how he faced dangers.

Thanks to the magical power of writing, the events took root in reality. The hero defeated the wizard and the light returned to the castle again. Princess Nora decided to use her powers to serve good and preserve the peace of Dreamland. And the hero and the princess lived happily ever after. Isn't that a wonderful magical story?

Mathematics

English Translation:

User: Is there a general rule for expanding binary expressions, like (a + b)^2? Take a deep breath, think step by step, and give a precise answer.

Assistant: The general rule for expanding the binary expression (a + b)^2 comes from the square root rule or what is known as the penumbra rule according to Newton's penumbra principle. The rule is applied as follows: (a + b)^2 = a^2 + 2ab + b^2, and this comes from the fact that (a + b)(a + b) = a*a + a*b + b*a + b*b = a^2 + 2ab + b^2.

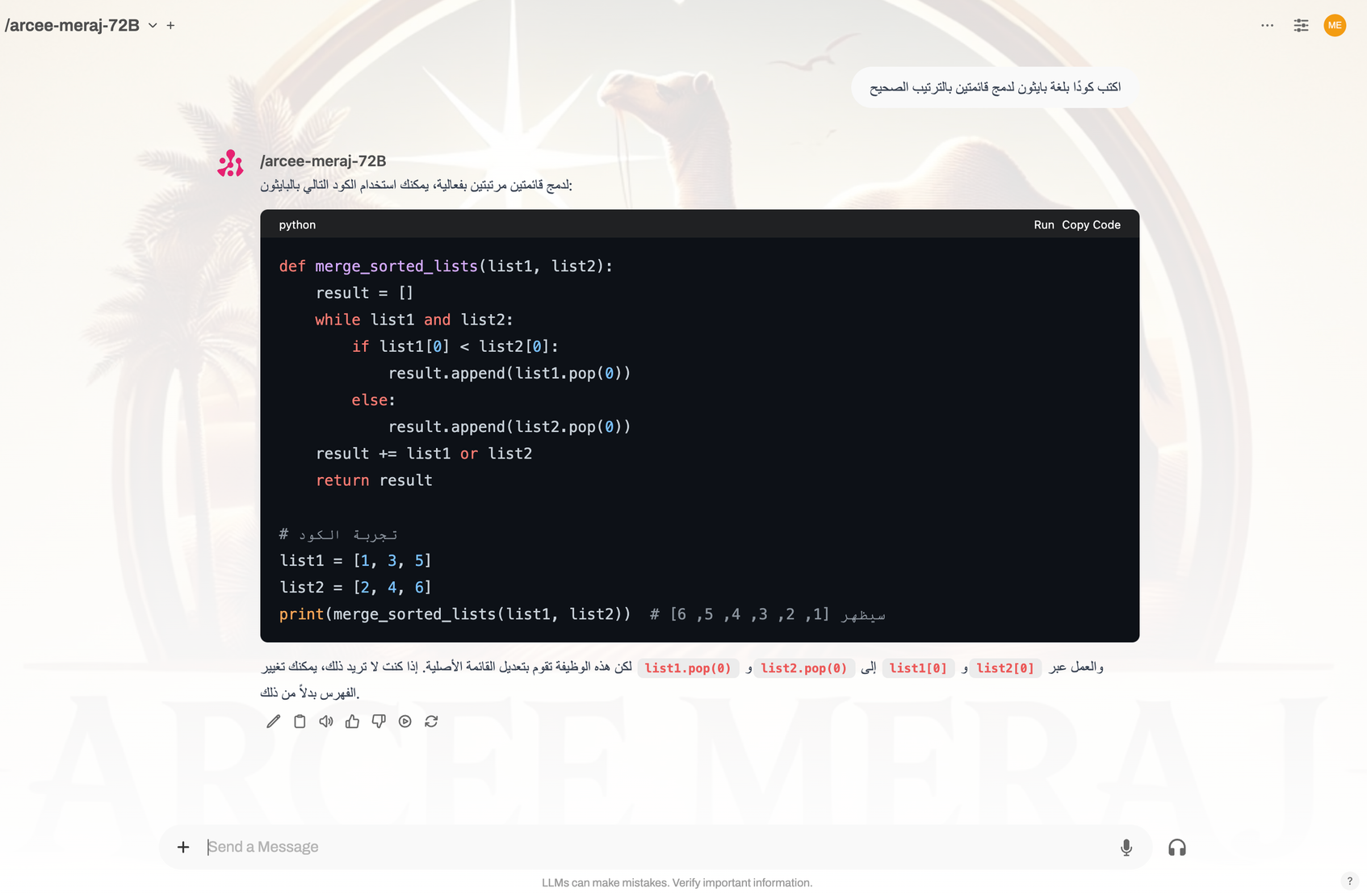

Coding

English Translation:

User: Write a Python code to merge two lists in the correct order.

Assistant: To efficiently merge two sorted lists, you can use the following code in Python:

Code snippet is shown in the picture

This function modifies the original lists. If you don't want that, you can change list1.pop(0) and list2.pop(0) to list1[0] and list2[0] and work with indices instead.
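The model's actual code appears only as an image, so the following is an independent sketch rather than its output. It shows the index-based alternative the answer describes: a two-pointer merge of two ascending lists that builds a new list and leaves both inputs untouched. The function name `merge_sorted` is illustrative.

```python
def merge_sorted(list1: list, list2: list) -> list:
    """Merge two ascending lists into a new ascending list without modifying either input."""
    merged = []
    i, j = 0, 0
    # Walk both lists with indices, always taking the smaller head element.
    # Using <= takes ties from list1 first, which keeps the merge stable.
    while i < len(list1) and j < len(list2):
        if list1[i] <= list2[j]:
            merged.append(list1[i])
            i += 1
        else:
            merged.append(list2[j])
            j += 1
    # At most one list still has elements left; they are already sorted.
    merged.extend(list1[i:])
    merged.extend(list2[j:])
    return merged


a = [1, 4, 7]
b = [2, 3, 8, 9]
print(merge_sorted(a, b))  # [1, 2, 3, 4, 7, 8, 9]
print(a, b)                # [1, 4, 7] [2, 3, 8, 9]
```

Unlike repeated `pop(0)`, which shifts every remaining element on each call, index access keeps the merge linear in the total length. Python's standard library also offers `heapq.merge`, which lazily merges any number of sorted iterables.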

Acknowledgments

We are grateful to the open source AI community for their continuous contributions and to the Qwen team for their foundational efforts on Qwen2-72B.

Looking Ahead

We invite researchers, developers, and businesses to explore the capabilities of Arcee-Meraj, with a particular focus on enhancing Arabic language support. Arabic, a rich and diverse language spoken by millions, presents unique challenges and opportunities due to its diverse dialects and cultural nuances. Our goal is to delve deep into these aspects to create more accurate and culturally attuned models. To achieve this, we seek active collaboration and feedback from the community. Your insights and contributions are vital in helping us develop more refined and effective Arabic language models for future versions. Join us in this endeavor to advance Arabic computational linguistics and ensure its prominent place in the multilingual AI landscape.

Authors' Note

Meraj is a collaborative effort by Arcee AI's Malikeh Ehghagi*, Maziyar Panahi*, Shahrzad Sayehban*, Jacob Solawetz, Mark McQuade, and Lucas Atkins.

(*Equal contributors.)

Learn More About Arcee AI's Models & Model Training Platform

Do you have a business use case for an LLM in a non-English language? If so, get in touch and we can tell you how Meraj and our other non-English models are earning immediate ROI for our customers. You can schedule a meeting here.