Arcee and mergekit unite

Several months ago, I stumbled upon an innovative technique in the world of language model training known as Model Merging. This approach fuses two or more LLMs into a single, cohesive model, offering a novel and still experimental way to create sophisticated models at a fraction of the cost, without heavy training or large GPU resources. I was immediately drawn to its potential to revolutionize the landscape of language model development.

Model Merging will transform the world of LLMs as we know it

As our team dug deeper into the intricacies of Model Merging, witnessing the surge of merged models on the Hugging Face leaderboard, we recognized an opportunity. We became convinced that Model Merging would emerge as the next significant breakthrough in the field of LLMs. Merging not only showcased effectiveness in generating state-of-the-art models but also promised to unlock unprecedented efficiency in an industry dominated by consistently large and resource-intensive training processes. Recognizing the significance of this transformative capability, our research team quickly moved to integrate model merging methods into the Arcee platform.

mergekit

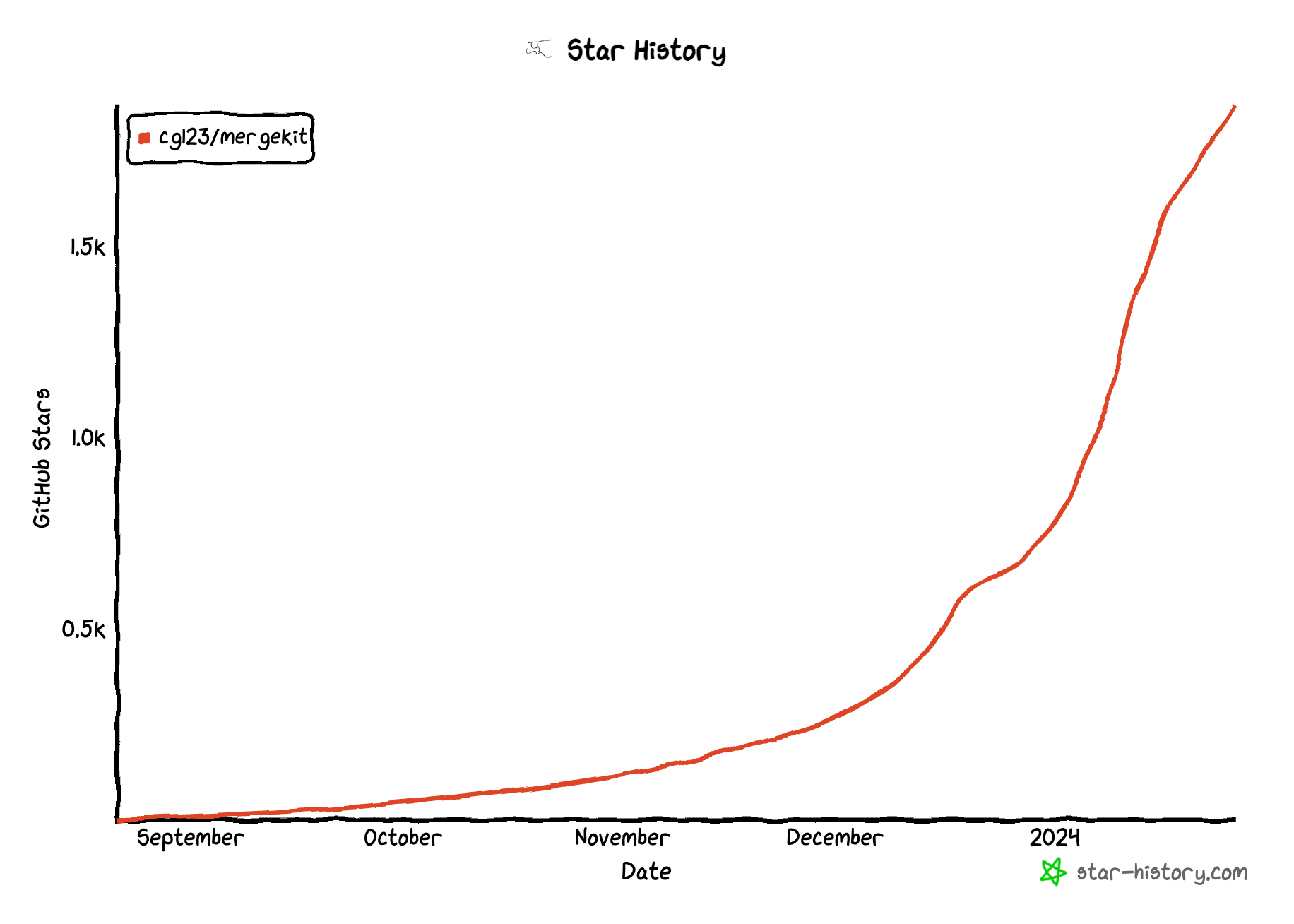

In parallel, just within the last few months, a library named mergekit emerged and shot into popularity among LLM developers.

Positioned as a toolkit designed for the fusion of pre-trained language models, mergekit adopts an out-of-core approach to execute intricate merges even within resource-constrained environments. Notably, mergekit enables merges to be executed solely on the CPU or, if desired, can be accelerated with as little as 8 GB of VRAM.
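To make the out-of-core idea concrete, here is a minimal sketch in plain Python. It is not mergekit's actual implementation: the `LazyCheckpoint` type, `merge_streaming`, and `make_lazy` are hypothetical names invented for illustration, and lists of floats stand in for real tensors. The point it tries to show is that a merge can proceed one parameter at a time, so only a small slice of each model needs to be in memory at any moment.

```python
from typing import Callable, Dict, Iterator, List, Tuple

Tensor = List[float]  # toy stand-in for a real tensor
# A "lazy checkpoint" maps each parameter name to a function that loads it on demand.
LazyCheckpoint = Dict[str, Callable[[], Tensor]]


def merge_streaming(checkpoints: List[LazyCheckpoint]) -> Iterator[Tuple[str, Tensor]]:
    """Yield (name, averaged tensor) one parameter at a time."""
    for name in list(checkpoints[0]):
        # Only this parameter's tensors are loaded while it is being merged.
        tensors = [ckpt[name]() for ckpt in checkpoints]
        merged = [sum(vals) / len(vals) for vals in zip(*tensors)]
        yield name, merged


def make_lazy(data: Dict[str, Tensor], log: List[str]) -> LazyCheckpoint:
    """Hypothetical helper: wrap in-memory data so every load is recorded in `log`."""
    def loader(name: str) -> Callable[[], Tensor]:
        def load() -> Tensor:
            log.append(name)
            return list(data[name])
        return load
    return {name: loader(name) for name in data}


load_log: List[str] = []
model_a = make_lazy({"embed": [1.0, 3.0], "head": [2.0]}, load_log)
model_b = make_lazy({"embed": [3.0, 5.0], "head": [4.0]}, load_log)

stream = merge_streaming([model_a, model_b])
first_name, first_tensor = next(stream)   # merges "embed" only
loads_after_first = list(load_log)        # "head" has not been touched yet
remaining = dict(stream)                  # merges the rest on demand
```

In a real setting the loader functions would read individual tensors from disk and the merged results would be written straight back out, which is what keeps peak memory low.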

The creator of mergekit, Charles Goddard, is an award-winning software engineer with a strong track record of pushing the boundaries of technological innovation at NASA and Apple. Charles has built something very special in mergekit, with a focus on ease of use, efficiency, and innovation.

mergekit and Arcee.ai are...merging!

In a significant advancement, Charles Goddard has officially joined the Arcee team, bringing with him the mergekit library.

Here are a few words from Charles:

I'm delighted to be joining the Arcee team. I started building mergekit as a platform for experimentation that anyone can use, even with low-end hardware. I believe (and the recent flood of amazing results show) that the potential of model merging has only just begun to be tapped. With the support of the fantastic team of researchers and engineers at Arcee, I'm looking forward to continuing to build mergekit into a world-class open source library for merging.

This strategic alliance marks a significant milestone for both Arcee and Charles, as we combine expertise and resources to further innovate and advance in the field of model merging. Furthermore, the integration of the mergekit library into the Arcee platform promises to elevate our capabilities and deliver even more impactful, novel solutions in the world of language models and model merging.

Consider an organization that would traditionally spend potentially hundreds of thousands of dollars training a large language model, either from scratch or through continual pre-training of an existing model. With the mergekit library, this organization only needs to train a model on its own private data, not on general intelligence or instruction-following capabilities, which can instead be merged in from an existing model. This approach can yield results comparable to starting from scratch (or continually pre-training a large model), in a much more energy-efficient manner and at a substantially reduced cost.

Arcee will be delivering that precise capability, not only through the mergekit repo, but through its SLM Adaptation system and domain adaptation kit. This is going to change the landscape of LLM training as we know it.

Why does this matter within an SLM system?

Merging domain-adapted model weights with general open-source models gives AI engineers a cost-effective way to extend general intelligence to the needs of their organizations. General capabilities, such as how to respond properly or facts from Wikipedia, should not need to be relearned, whereas the complex concepts within an organization should be tailored and held as intellectual property.
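As a rough illustration of what merging domain-adapted weights with a general model can look like, the sketch below linearly interpolates two toy checkpoints. It assumes both models share the same architecture and parameter names. The `linear_merge` function, the `domain_weight` parameter, and the parameter names are made up for this example; they are not mergekit's API, and linear interpolation is only one of several possible merge methods.

```python
from typing import Dict, List

# Parameter name -> flattened values (toy stand-in for real tensors).
Weights = Dict[str, List[float]]


def linear_merge(general: Weights, domain: Weights, domain_weight: float = 0.5) -> Weights:
    """Interpolate two checkpoints that share parameter names and shapes."""
    if not 0.0 <= domain_weight <= 1.0:
        raise ValueError("domain_weight must be in [0, 1]")
    if general.keys() != domain.keys():
        raise ValueError("models must share the same parameter names")

    merged: Weights = {}
    for name, g_vals in general.items():
        d_vals = domain[name]
        if len(g_vals) != len(d_vals):
            raise ValueError(f"shape mismatch for {name}")
        # Each merged value is a weighted average of the two source values.
        merged[name] = [
            (1.0 - domain_weight) * g + domain_weight * d
            for g, d in zip(g_vals, d_vals)
        ]
    return merged


# Hypothetical checkpoints: a general model and a domain-adapted one.
general_model = {"layer0.weight": [0.0, 2.0], "layer0.bias": [1.0]}
domain_model = {"layer0.weight": [4.0, 2.0], "layer0.bias": [3.0]}

merged_model = linear_merge(general_model, domain_model, domain_weight=0.25)
```

Here `domain_weight` controls how far the result leans toward the domain-adapted model: 0 returns the general model unchanged, and 1 returns the domain-adapted one.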

In many cases, training, merging, and deploying a domain-adapted model is an endeavor that engineering teams need to manage carefully within the requirements of their security mandate. At Arcee, we are building routines that help you create the artifacts that are used within mergekit.

The future of mergekit

We are unwavering in our commitment to maintaining the mergekit library as an open-source powerhouse and will strive to solidify its position as the best library in the world for merging models. All mergekit functionality will remain open source and available to the greater AI community as we extend the boundaries of general model capabilities.

With Charles now part of Arcee and backed by the full strength of Arcee's engineers and researchers, we are poised to amplify and enhance the already exceptional capabilities of the mergekit library. Our collective dedication will ensure mergekit's continuous evolution, setting new standards and pushing the boundaries of what is achievable with the novel approach of model merging.

~~~

Welcome aboard Arcee, Charles! We are beyond excited to join forces with you and continue propelling mergekit to the forefront of language model innovation.