Distilling LLMs with Compact, Powerful Models for Everyone: Introducing DistillKit by Arcee AI

First, Arcee AI revolutionized Small Language Models (SLMs) with Model Merging and the open-source repo MergeKit. Today we bring you another leap forward in the creation and distribution of SLMs with an open-source tool we're calling DistillKit.

At Arcee AI, we're on a mission to make artificial intelligence more accessible and efficient. Today, we're thrilled to announce the release of DistillKit, our new open-source tool that's set to change how we create and distribute Small Language Models (SLMs).

What is DistillKit?

DistillKit is our open-source project focused on something called "model distillation."

Think of it like this: we have a big, smart model (let's call it the teacher) that knows a lot but requires a lot of resources to run. What we want is a smaller model (the student) that can learn most of what the big model knows, but can run on your laptop or phone.

That's what DistillKit does – it helps create smaller models that are powerful like the big ones, but need much less computing power. This means more people can use advanced models in more places.

How DistillKit Works: Teaching a Smaller Model

We're using two main methods in DistillKit to transfer knowledge from the big model (the teacher) to the smaller one (the student):

- Logit-based Distillation

This method is like having the big model show its work to the smaller model. The smaller AI doesn't just learn the right answers – it also learns how confident the big model is about different possible answers. This helps the smaller model think more like the bigger one.

- Hidden States-based Distillation

This approach teaches the smaller model to represent information internally in a similar way to the big model, by matching the intermediate representations (hidden states) inside the network rather than only the final outputs. It's like teaching someone to fish instead of just giving them a fish: we're helping the smaller model learn the thinking process, not just the final answers.

Both methods aim to create smaller models that are much more capable than they would be otherwise.
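To make the two ideas more concrete, here is a minimal sketch in plain Python of what each loss might look like for a single token. This is not DistillKit's actual code: the function names, the temperature value, the T² scaling, and the linear `projection` matrix (used when the student's hidden size differs from the teacher's) are all assumptions chosen for illustration.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_total for x in scaled]


def logit_distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) over softened next-token distributions."""
    teacher_logp = log_softmax(teacher_logits, temperature)
    student_logp = log_softmax(student_logits, temperature)
    kl = sum(math.exp(t) * (t - s) for t, s in zip(teacher_logp, student_logp))
    # Scaling by T^2 is a common convention, assumed here, that keeps the
    # loss magnitude comparable across temperatures.
    return kl * temperature ** 2


def hidden_state_distillation_loss(student_hidden, teacher_hidden, projection):
    """Mean squared error between projected student and teacher hidden vectors.

    `projection` is a hypothetical (teacher_dim x student_dim) matrix that maps
    the student's hidden vector into the teacher's hidden size.
    """
    projected = [sum(w * h for w, h in zip(row, student_hidden)) for row in projection]
    squared_errors = [(p - t) ** 2 for p, t in zip(projected, teacher_hidden)]
    return sum(squared_errors) / len(squared_errors)


if __name__ == "__main__":
    teacher_logits = [2.0, 1.0, 0.1]   # teacher is fairly confident in token 0
    student_logits = [1.5, 1.2, 0.3]   # student is less sure
    print("logit loss:", logit_distillation_loss(student_logits, teacher_logits))

    student_hidden = [1.0, 2.0]                    # student hidden size 2
    teacher_hidden = [1.0, 2.0, 5.0]               # teacher hidden size 3
    projection = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    print("hidden loss:", hidden_state_distillation_loss(
        student_hidden, teacher_hidden, projection))
```

In the logit-based loss, the student is rewarded for matching the teacher's whole probability distribution, including how much weight it gives to the "wrong" tokens. In the hidden-state loss, the student is pulled toward the teacher's internal representation, which is why a projection is needed when the two models have different widths.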

What's in DistillKit?

Our first release of DistillKit includes:

- Supervised Fine-Tuning with Distillation: We've combined traditional AI training techniques with our new distillation methods to make the smaller models even smarter.

- Experimental Results: We've run lots of tests to show how well our methods work (more on this later!).

- Open-Source Access: We've made all of this freely available for researchers and developers to use and improve.
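As a rough illustration of the first item, one common way to combine supervised fine-tuning with distillation is to blend a standard cross-entropy loss on the ground-truth token with a distillation loss against the teacher. The sketch below assumes that approach; the function name, the `alpha` weighting, and the temperature are hypothetical and are not taken from DistillKit itself.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = peak + math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - log_total for x in scaled]


def sft_with_distillation_loss(student_logits, teacher_logits, target_index,
                               alpha=0.5, temperature=2.0):
    """Blend a supervised loss with a logit-distillation loss for one token.

    alpha = 0.0 gives plain supervised fine-tuning; alpha = 1.0 gives pure
    distillation. Both the blend and its default are illustrative assumptions.
    """
    # Supervised term: cross-entropy against the ground-truth token.
    cross_entropy = -log_softmax(student_logits)[target_index]

    # Distillation term: KL(teacher || student) on softened distributions.
    teacher_logp = log_softmax(teacher_logits, temperature)
    student_logp = log_softmax(student_logits, temperature)
    distillation = sum(
        math.exp(t) * (t - s) for t, s in zip(teacher_logp, student_logp)
    ) * temperature ** 2

    return alpha * distillation + (1.0 - alpha) * cross_entropy


if __name__ == "__main__":
    student_logits = [1.5, 1.2, 0.3]
    teacher_logits = [2.0, 1.0, 0.1]
    for alpha in (0.0, 0.5, 1.0):
        loss = sft_with_distillation_loss(
            student_logits, teacher_logits, target_index=0, alpha=alpha)
        print(f"alpha={alpha}: loss={loss}")
```

The weighting lets the student learn from the labeled data and from the teacher's softer guidance at the same time; the right balance is something you would tune per task.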

In future versions, we plan to add even more advanced techniques to make the distillation process even better.

Our Experiments: Putting DistillKit to the Test

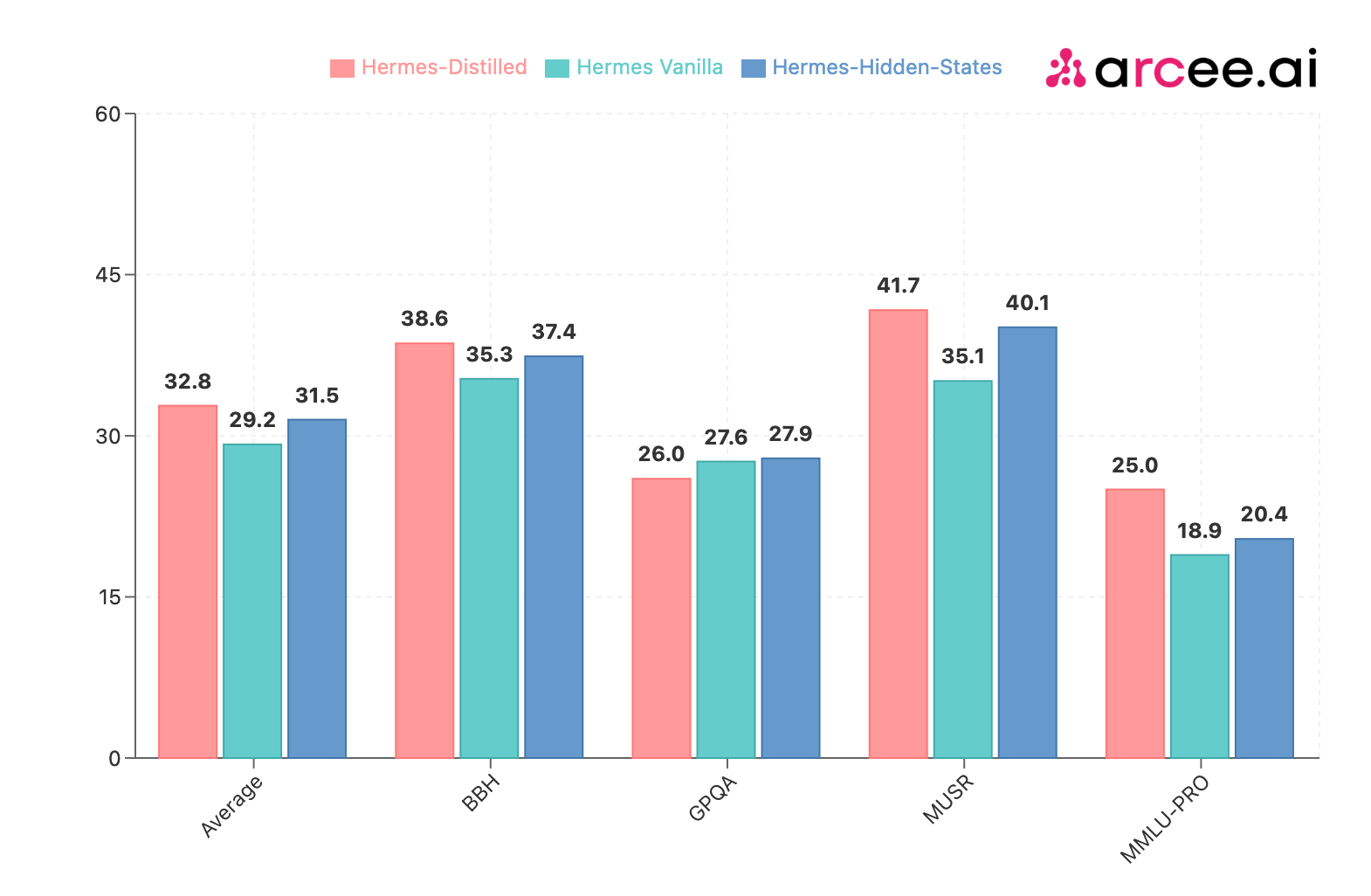

We ran several experiments to see how well DistillKit works:

- Choosing a Teacher: We used our own powerful AI model, Arcee-Spark, as the teacher – it's great at lots of different tasks.

- Training Different Types of Students: We taught both general-purpose models and ones designed for specific types of instructions.

- Comparing Distillation Techniques: We tested both of our distillation methods to see which works better in different situations.

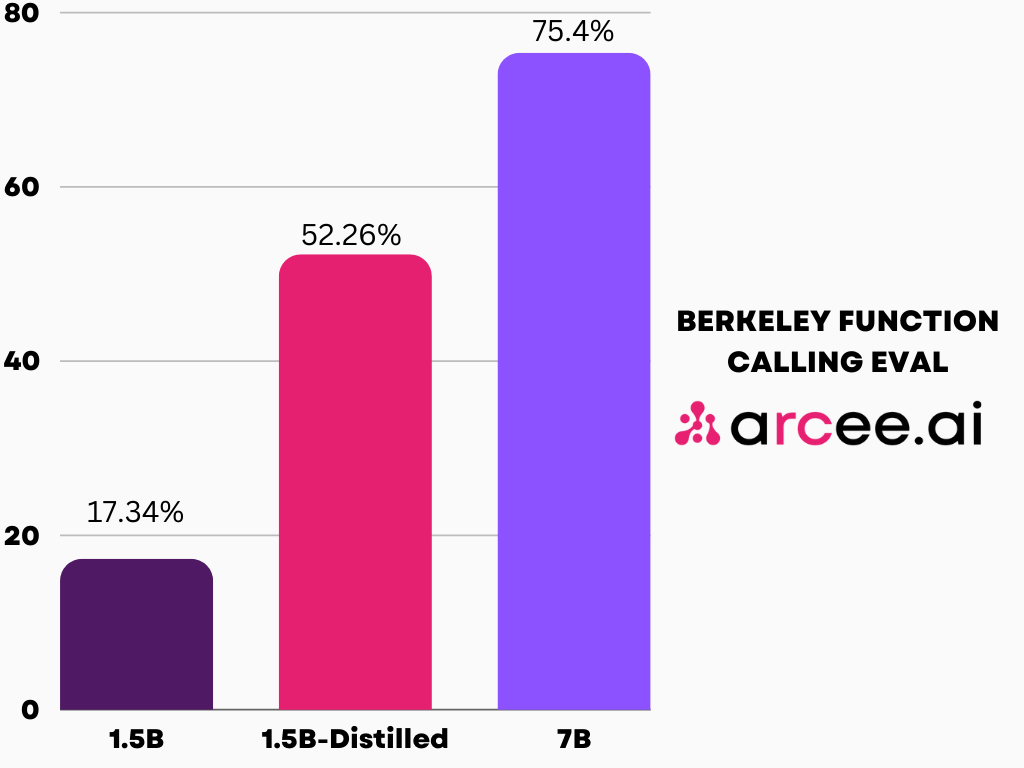

- Specializing in Specific Tasks: We also tried creating a smaller model that's really good at one specific thing – in this case, understanding how to use different functions or tools.

Why DistillKit Matters

We believe DistillKit could have a big impact on how AI is used:

- AI for Everyone: Smaller, efficient models put advanced capabilities within reach of more people and businesses.

- Saving Energy and Money: Smaller models need less computing power, which means they use less energy and cost less to run.

- Better Privacy and Security: With smaller models, it's more feasible to run them directly on your device instead of sending data to the cloud.

- Specialized AI Assistants: We can create small models that are experts in specific fields or tasks.

What's Next for DistillKit?

We have big plans for the future:

- Community Collaboration: We're inviting researchers and developers to use DistillKit and help us improve it.

- More Features: Future versions will include new and improved ways to create efficient models.

- Tackling Bigger Challenges: We're working on ways to apply our techniques to even larger AI models.

Evaluations

Arcee-Labs

This release marks the debut of Arcee-Labs, a division of Arcee AI dedicated to accelerating open-source research. Our mission is to rapidly deploy resources, models, and research findings to empower both Arcee AI and the wider community.

In an era of increasingly frequent breakthroughs in LLM research, models, and techniques, we recognize the need for agility and adaptability. Through our efforts, we strive to significantly contribute to the advancement of open-source AI technology and support the community in keeping pace with these rapid developments.

Conclusion

At Arcee AI, we believe that the future of AI isn't just about creating bigger and smarter models; it's about making advanced AI available to everyone. DistillKit is our contribution to this goal, helping to create a future where powerful models are both practical and accessible.

We're excited to see how researchers and developers will use DistillKit to push the boundaries of what's possible with smaller models. Stay tuned for more updates as we continue this journey!